Why social media bans won’t save our kids

Vendan Ananda Kumararajah

- Opinion & Analysis

Politicians are rushing to block under-16s from social platforms, but the danger runs much deeper than screen time or teenage scrolling, warns Vendan Ananda Kumararajah. The real threat lies in systems built for profit, not childhood, and only a redesign of the platforms themselves will make the online world genuinely safe for young people.

Australia’s new under-16 social media restrictions came into force this week, and new UK research shows that more than half of British adults would support a similar ban. The instinct is understandable. Parents feel outmatched by opaque platforms that shape their children’s lives, while trust in tech firms is eroding rapidly. Only 13% of UK adults say they trust social media platforms with biometric data — a strikingly low figure in an era where age-estimation technology is becoming central to online safety laws.

But beneath the headlines lies a deeper systemic dilemma. Children increasingly need to be online to learn, socialise, and participate in digital culture. At the same time, parents perceive that the environments their children inhabit have been engineered for commercial optimisation rather than developmental wellbeing. This is not a contradiction that can be solved through bans alone. It is a symptom of a governance architecture that has failed to evolve.

The modern social platform is a machine optimised for attention, growth, and data extraction. This is not a moral failing of individual companies; it is a structural feature of the system they operate within. The incentives that drive platform behaviour do not naturally align with the safety, dignity, or developmental integrity of young users.

This is why even well-intentioned regulations often fall short. Age-verification requirements are expanding globally, yet public trust remains low. Most adults support age checks in principle, but only when the mechanisms are privacy-preserving and transparent. Without such guarantees, age assurance becomes another point of anxiety in an already polarised debate.

What parents are expressing, often without the vocabulary for it, is a profound discomfort with systems that lack ethical accountability. They want more than content filters or parental controls. They want to know that the deeper machinery of the digital world recognises childhood as something uniquely worth protecting.

If society reaches the point where banning under-16s from social media appears to be the most viable option, it reveals something important: we have not built digital environments fit for children in the first place. A ban may create breathing room, but it avoids the harder question of what it would take for online spaces to be inherently safe for the young rather than selectively restrictive. Surface fixes such as content moderation, reporting tools, or even biometric age checks operate at the periphery of the system. The real issues are structural, and structural problems require architectural solutions.

To move beyond bans and surface interventions, social platforms must adopt a governance architecture that examines how decisions are made, how incentives shape behaviour, and how ethical constraints are embedded throughout the system. This requires three interlocking layers.

- Ethical Viability: ensuring systems remain aligned with child wellbeing.

Before deployment, platforms should be required to demonstrate ethical viability: whether the system’s design, algorithms, and data flows support or undermine children’s developmental needs. This involves evaluating how recommendation systems handle vulnerability, identifying algorithmic pathways that push minors toward harmful content, and stress-testing whether platform design encourages agency or dependence. This is not a moral appeal; it is a governance expectation. Just as aviation systems must prove safety before flight, digital platforms should prove ethical fitness before use.

- Distortion Tracking: monitoring algorithmic drift in real time.

Even well-designed systems degrade when incentives distort their behaviour. A governance architecture must therefore track systemic drift continuously, observing when algorithms shift toward addictive engagement patterns, when content ecosystems polarise or sensationalise, when commercial pressures begin to override safety constraints, and when subtle forms of exploitation emerge through feedback loops. The problem today is that platforms tend to intervene only after public outcry. Architectural governance demands that distortion be detected and corrected before harm scales.

- Legitimate Agency: enabling age-appropriate participation.

The final layer focuses on creating ways for young users to participate safely and meaningfully, with boundaries that align with their developmental stage. This includes designing curated digital ecosystems for younger users, developing transparent and privacy-first on-device age estimation, providing mechanisms that give guardians clarity without invading autonomy, establishing accountability trails regulators can audit, and creating pathways that expand as children mature. Agency is not exposure; it is the capacity to navigate environments built with developmental integrity in mind.

This architectural model moves safety from reaction to design. Instead of relying on bans, moderation after harm, PR-driven fixes, or crisis response, platforms would operate on ethical design principles, real-time drift correction, transparent and enforceable governance, and environments shaped around how children grow. This approach operationalises child safety at the level where harm originates: in system behaviour, not user behaviour.

The model dissolves the central contradiction between the need to protect children and the need for them to be online to learn and develop. With ethical viability, distortion tracking, and legitimate agency in place, children can participate without being thrust into adult-tier environments. Platforms can verify age without harvesting biometrics. Regulators gain accountability without invasive data demands. Parents regain trust because the system itself enforces boundaries. Platforms continue to innovate and grow through differentiated, safe ecosystems. The conversation shifts from control to capability — from excluding children to designing a digital world worthy of them.

New research shows that public support for facial age estimation triples when images never leave the device. This reveals something essential: the public is not rejecting age assurance; they are rejecting systems that treat safety as synonymous with surveillance. Architectural governance makes privacy-preserving age assurance one part of a broader ethical system rather than a lone tool or political slogan.

The debate about under-16 bans reflects a public searching for certainty in systems that currently offer very little of it. The real opportunity lies in redesigning the architectures of platforms and governance so that safety is structural, privacy is respected, agency is nurtured, trust is possible, and childhood is not collateral damage in the race for engagement. The question is not whether children should be online. They already are, and they will be. The question is whether we can build digital systems worthy of their presence.

Vendan Ananda Kumararajah is an internationally recognised transformation architect and systems thinker. The originator of the A3 Model—a new-order cybernetic framework uniting ethics, distortion awareness, and agency in AI and governance—he bridges ancient Tamil philosophy with contemporary systems science. A Member of the Chartered Management Institute and author of Navigating Complexity and System Challenges: Foundations for the A3 Model (2025), Vendan is redefining how intelligence, governance, and ethics interconnect in an age of autonomous technologies.


Main image: Kampus Production/Pexels

RECENT ARTICLES

-

Europe cannot call itself ‘equal’ while disabled citizens are still fighting for access

Europe cannot call itself ‘equal’ while disabled citizens are still fighting for access -

Is Europe regulating the future or forgetting to build it? The hidden flaw in digital sovereignty

Is Europe regulating the future or forgetting to build it? The hidden flaw in digital sovereignty -

The era of easy markets is ending — here are the risks investors can no longer ignore

The era of easy markets is ending — here are the risks investors can no longer ignore -

Is testosterone the new performance hack for executives?

Is testosterone the new performance hack for executives? -

Can we regulate reality? AI, sovereignty and the battle over what counts as real

Can we regulate reality? AI, sovereignty and the battle over what counts as real -

NATO gears up for conflict as transatlantic strains grow

NATO gears up for conflict as transatlantic strains grow -

Facial recognition is leaving the US border — and we should be concerned

Facial recognition is leaving the US border — and we should be concerned -

Wheelchair design is stuck in the past — and disabled people are paying the price

Wheelchair design is stuck in the past — and disabled people are paying the price -

Why Europe still needs America

Why Europe still needs America -

Why Europe’s finance apps must start borrowing from each other’s playbooks

Why Europe’s finance apps must start borrowing from each other’s playbooks -

Why universities must set clear rules for AI use before trust in academia erodes

Why universities must set clear rules for AI use before trust in academia erodes -

The lucky leader: six lessons on why fortune favours some and fails others

The lucky leader: six lessons on why fortune favours some and fails others -

Reckon AI has cracked thinking? Think again

Reckon AI has cracked thinking? Think again -

The new 10 year National Cancer Plan: fewer measures, more heart?

The new 10 year National Cancer Plan: fewer measures, more heart? -

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing -

The legality of tax planning in an age of moral outrage

The legality of tax planning in an age of moral outrage -

The limits of good intentions in public policy

The limits of good intentions in public policy -

Are favouritism and fear holding back Germany’s rearmament?

Are favouritism and fear holding back Germany’s rearmament? -

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth -

Why mere survival is no longer enough for children with brain tumours

Why mere survival is no longer enough for children with brain tumours -

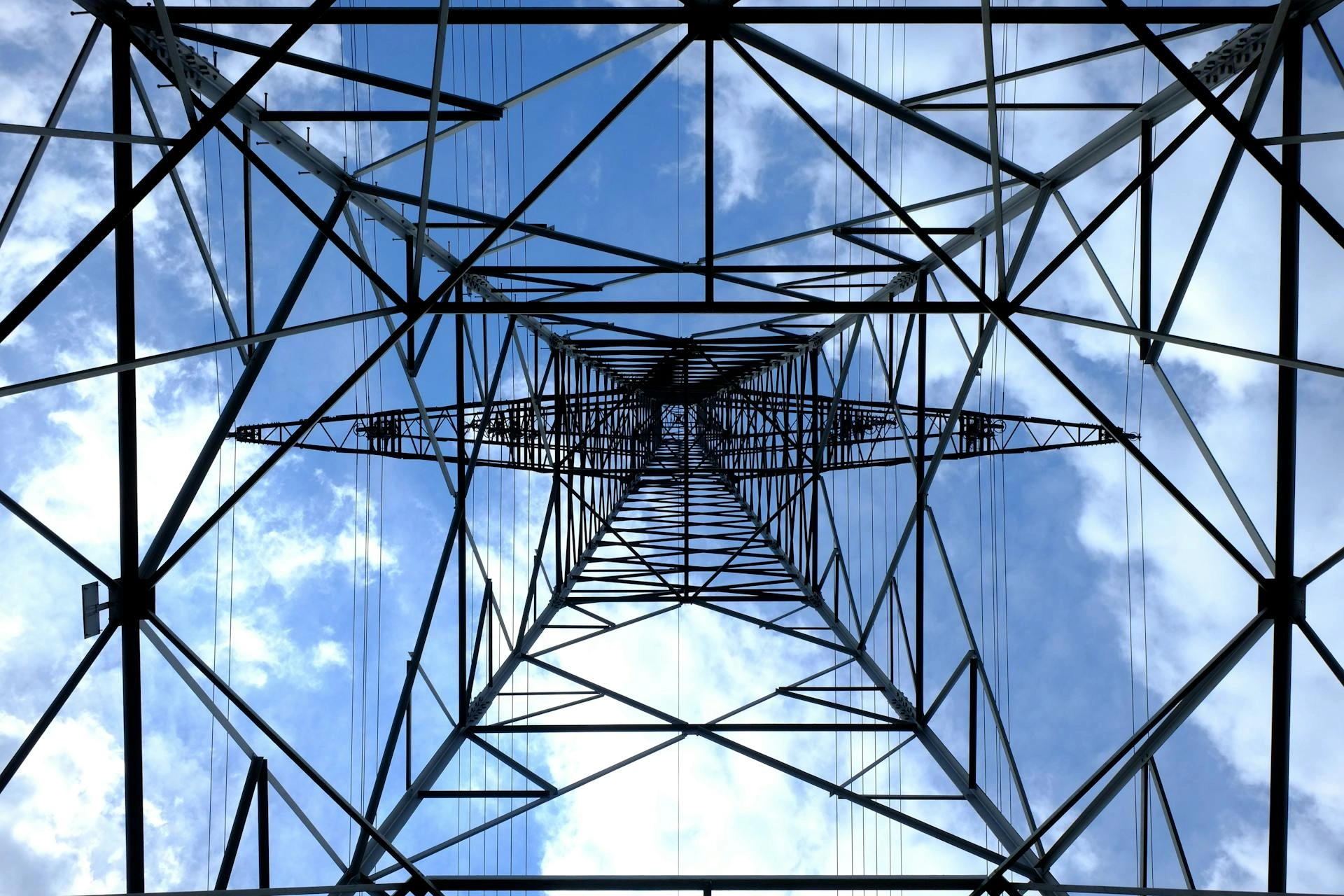

What Germany’s Energiewende teaches Europe about power, risk and reality

What Germany’s Energiewende teaches Europe about power, risk and reality -

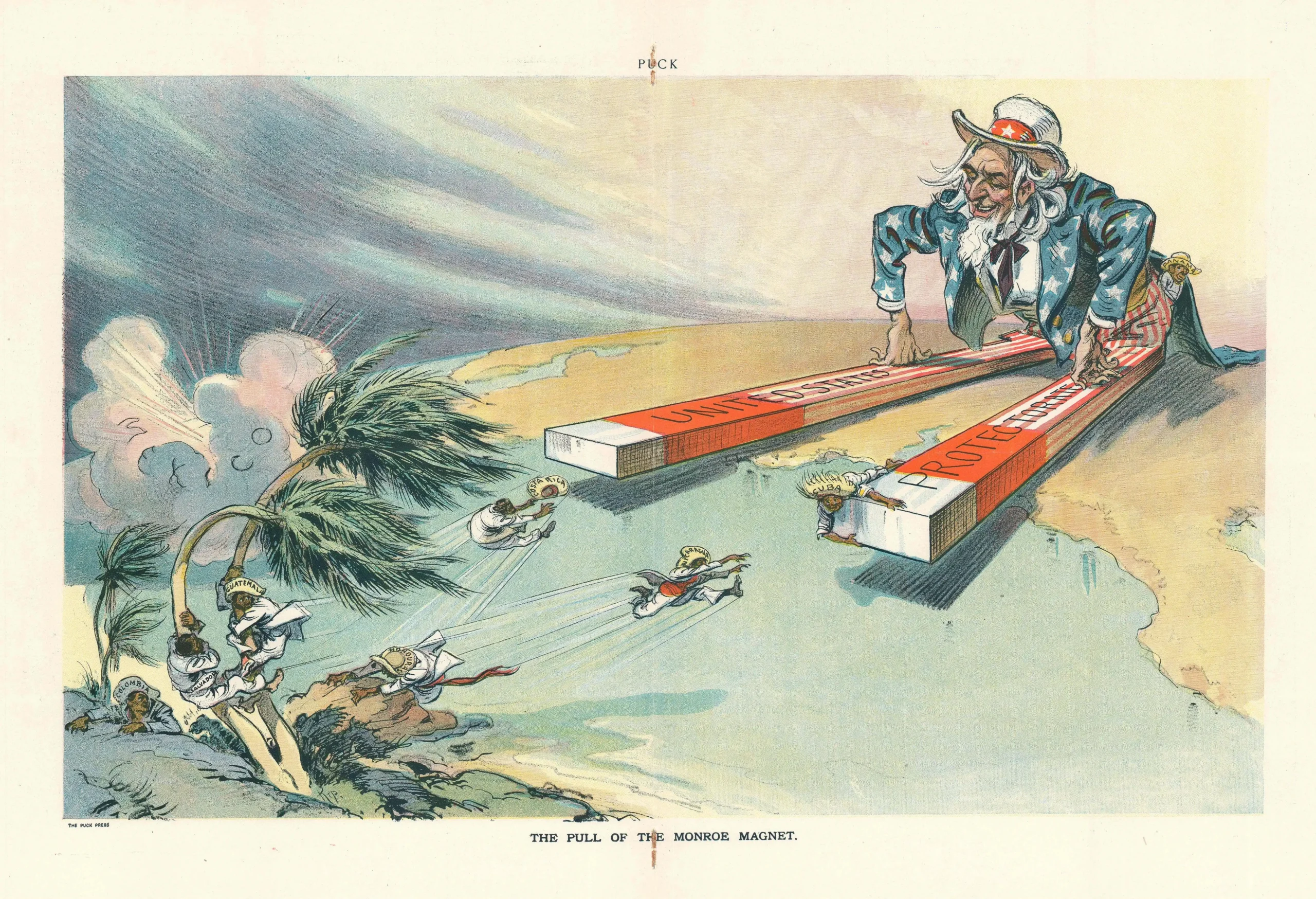

What the Monroe Doctrine actually said — and why Trump is invoking it now

What the Monroe Doctrine actually said — and why Trump is invoking it now -

Love with responsibility: rethinking supply chains this Valentine’s Day

Love with responsibility: rethinking supply chains this Valentine’s Day -

Why the India–EU trade deal matters far beyond diplomacy

Why the India–EU trade deal matters far beyond diplomacy -

Why the countryside is far safer than we think - and why apex predators belong in it

Why the countryside is far safer than we think - and why apex predators belong in it