Facebook’s job ads ruling opens a new era of accountability for artificial intelligence

Vendan Ananda Kumararajah

France’s equality watchdog has ruled that Facebook’s job-advertising algorithm engaged in indirect gender discrimination, a finding that for the first time treats bias in code as a breach of equality law. Here, Vendan Kumararajah examines a precedent that extends accountability from human decision-makers to machine systems, a shift with major implications for recruitment, healthcare, and finance, where algorithms now shape access to work, credit, and care.

France’s equality watchdog has ruled that Facebook’s job-advertising algorithm was sexist, a landmark decision establishing that automated systems can engage in discrimination. The Défenseur des Droits reportedly reached its conclusion after finding that adverts for mechanic roles were shown almost exclusively to men, while preschool teaching posts were directed mainly at women. The regulator judged that Facebook’s automated distribution treated users differently on the basis of sex and therefore breached equality principles. Meta has been given three months to outline corrective measures.

The ruling followed a detailed investigation by Global Witness, working alongside the Foundation for Women (La Fondation des Femmes) and Women Engineers (Femmes Ingénieurs). Their research revealed that nine in ten recipients of mechanic job adverts were male, while nine in ten who saw preschool teaching roles were female. Eight in ten viewers of psychologist adverts were women, and seven in ten of those shown pilot vacancies were men. The pattern arose from algorithmic behaviour shaped by past user engagement, reinforcing the occupational segregation that equality law seeks to prevent.
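The arithmetic behind figures like these is simple enough to sketch. The following is a minimal illustration, not the method Global Witness used: the `AdDelivery` record, the `flag_skewed` helper, the 0.7 threshold, and the per-100 viewer counts are all hypothetical, with the counts chosen only to mirror the ratios quoted above.

```python
from dataclasses import dataclass


@dataclass
class AdDelivery:
    """Hypothetical record of who was shown one job advert."""
    role: str
    men: int
    women: int

    def dominant_share(self) -> float:
        """Fraction of viewers belonging to the larger of the two groups."""
        total = self.men + self.women
        return max(self.men, self.women) / total if total else 0.0


def flag_skewed(ads, threshold=0.7):
    """Return {role: dominant share} for adverts at or above `threshold`.

    The threshold is an assumption for illustration, not a legal standard.
    """
    return {
        ad.role: ad.dominant_share()
        for ad in ads
        if ad.dominant_share() >= threshold
    }


# Illustrative counts per 100 viewers, matching the ratios in the text.
ads = [
    AdDelivery("mechanic", men=90, women=10),
    AdDelivery("preschool teacher", men=10, women=90),
    AdDelivery("psychologist", men=20, women=80),
    AdDelivery("pilot", men=70, women=30),
    AdDelivery("balanced example", men=50, women=50),  # not from the study
]

for role, share in flag_skewed(ads).items():
    print(f"{role}: {share:.0%} of viewers from one gender")
```

A check of this kind only describes who saw an advert. It says nothing about why delivery was skewed, which is the question the regulator had to answer.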

Meta has stated that it disagrees with the decision and is considering its response. The finding nonetheless sets a European precedent by treating algorithmic bias as discrimination in law. A digital process can now fall within the same accountability framework that governs human decision-making, expanding the reach of equality regulation into the realm of machine learning.

The case draws attention to a wider transformation. Across employment, healthcare, finance, and public services, algorithmic systems now determine access to work, treatment, credit, and support. Recruiters depend on automated screening tools; hospitals use triage engines; lenders rely on scoring models. Decisions with material consequences are made by systems that provide no explanation and offer no route of appeal. Discrimination has become structural, embedded within data and optimisation processes that replicate patterns of inequality.

In healthcare, similar issues have emerged. Studies of emergency triage algorithms in Europe and the United States have found that women and minority patients are frequently assigned lower urgency scores because historical data encoded decades of under-diagnosis. When researchers substituted gender labels in identical clinical notes, the same symptoms were categorised as less severe when attributed to a female patient. These outcomes reveal how systems learn and repeat the distortions of the societies that produce their data.

Financial and insurance models exhibit the same logic. Risk engines classify individuals according to patterns of repayment and demographic history, frequently penalising groups with lower historical access to credit. In each of these fields, AI has assumed a gatekeeping role once reserved for human discretion. The shift has altered the structure of fairness without public discussion and without clear accountability.

Some governments have begun to respond. New York City requires bias audits for hiring algorithms, and the EU AI Act, whose obligations are being phased in, classifies certain applications in recruitment, medicine, and policing as high-risk, placing them under stricter oversight. These efforts indicate a growing awareness of the need for supervision of automated decision-making. The speed of adoption across the private and public sectors, however, continues to exceed the pace of governance.
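To make the idea of a bias audit concrete, one metric commonly reported in audits of hiring tools is the impact ratio: each group's selection rate divided by the rate of the most-selected group. The sketch below assumes that metric. The function names, the applicant counts, and the 0.8 cut-off (a rule of thumb sometimes used in US employment practice) are illustrative assumptions, not requirements taken from this article or from any specific statute.

```python
def selection_rates(outcomes):
    """Map {group: (selected, total)} to {group: selection rate}."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0.0:
        return {group: 0.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening results: (candidates advanced, candidates screened).
outcomes = {"men": (50, 200), "women": (30, 200)}

ratios = impact_ratios(outcomes)
for group, ratio in ratios.items():
    # 0.8 is an assumed rule-of-thumb cut-off, used here for illustration.
    note = "review" if ratio < 0.8 else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({note})")
```

A low ratio does not prove discrimination on its own, but it shows the kind of measurable signal an audit can surface for human review.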

The French ruling introduces a legal foundation for accountability. It recognises that automated systems affecting people’s lives must be transparent, auditable, and open to scrutiny. Developers and deploying institutions require clear responsibilities for preventing harm. Independent fairness assessments, understandable explanations of decision logic, and enforceable rights to challenge outcomes should form the basic conditions of responsible AI use.

Further safeguards are becoming essential. Systems of this scale should undergo independent fairness audits during development and deployment. Their outputs should remain interpretable by the people they affect. Continuous demographic monitoring should form part of their operation. Liability for algorithmic harm should be clearly defined between the creators and the organisations that use their products. Automation in high-stakes areas such as healthcare, recruitment, and financial access should remain under active human supervision.

That supervision, whether it concerns employment, healthcare, or personal freedom, should rest with individuals capable of moral and legal reasoning. Technology can assist that process, but it cannot assume ethical authority.

The Paris decision represents an early stage in the creation of such a framework. By affirming that discrimination can originate within code, it extends the reach of equality law into the digital sphere and provides a basis for further reform. Accountability in algorithmic governance will depend on the continued application of transparency, fairness, and human responsibility across every system that shapes access to opportunity.

Vendan Ananda Kumararajah is an internationally recognised transformation architect and systems thinker. The originator of the A3 Model—a new-order cybernetic framework uniting ethics, distortion awareness, and agency in AI and governance—he bridges ancient Tamil philosophy with contemporary systems science. A Member of the Chartered Management Institute and author of Navigating Complexity and System Challenges: Foundations for the A3 Model (2025), Vendan is redefining how intelligence, governance, and ethics interconnect in an age of autonomous technologies.

Do you have news to share or expertise to contribute? The European welcomes insights from business leaders and sector specialists. Get in touch with our editorial team to find out more.

RECENT ARTICLES

-

Europe cannot call itself ‘equal’ while disabled citizens are still fighting for access

Europe cannot call itself ‘equal’ while disabled citizens are still fighting for access -

Is Europe regulating the future or forgetting to build it? The hidden flaw in digital sovereignty

Is Europe regulating the future or forgetting to build it? The hidden flaw in digital sovereignty -

The era of easy markets is ending — here are the risks investors can no longer ignore

The era of easy markets is ending — here are the risks investors can no longer ignore -

Is testosterone the new performance hack for executives?

Is testosterone the new performance hack for executives? -

Can we regulate reality? AI, sovereignty and the battle over what counts as real

Can we regulate reality? AI, sovereignty and the battle over what counts as real -

NATO gears up for conflict as transatlantic strains grow

NATO gears up for conflict as transatlantic strains grow -

Facial recognition is leaving the US border — and we should be concerned

Facial recognition is leaving the US border — and we should be concerned -

Wheelchair design is stuck in the past — and disabled people are paying the price

Wheelchair design is stuck in the past — and disabled people are paying the price -

Why Europe still needs America

Why Europe still needs America -

Why Europe’s finance apps must start borrowing from each other’s playbooks

Why Europe’s finance apps must start borrowing from each other’s playbooks -

Why universities must set clear rules for AI use before trust in academia erodes

Why universities must set clear rules for AI use before trust in academia erodes -

The lucky leader: six lessons on why fortune favours some and fails others

The lucky leader: six lessons on why fortune favours some and fails others -

Reckon AI has cracked thinking? Think again

Reckon AI has cracked thinking? Think again -

The new 10 year National Cancer Plan: fewer measures, more heart?

The new 10 year National Cancer Plan: fewer measures, more heart? -

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing -

The legality of tax planning in an age of moral outrage

The legality of tax planning in an age of moral outrage -

The limits of good intentions in public policy

The limits of good intentions in public policy -

Are favouritism and fear holding back Germany’s rearmament?

Are favouritism and fear holding back Germany’s rearmament? -

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth -

Why mere survival is no longer enough for children with brain tumours

Why mere survival is no longer enough for children with brain tumours -

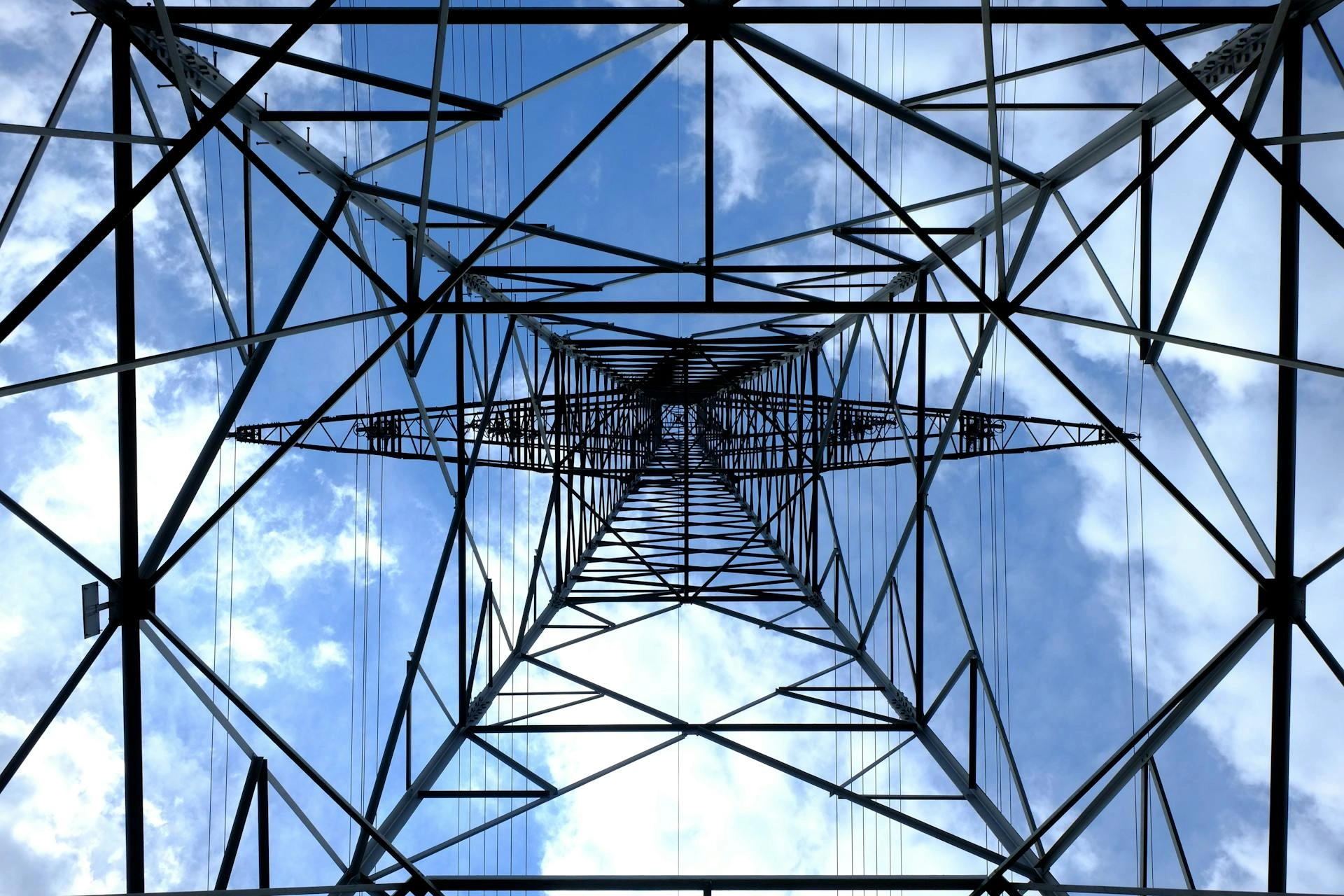

What Germany’s Energiewende teaches Europe about power, risk and reality

What Germany’s Energiewende teaches Europe about power, risk and reality -

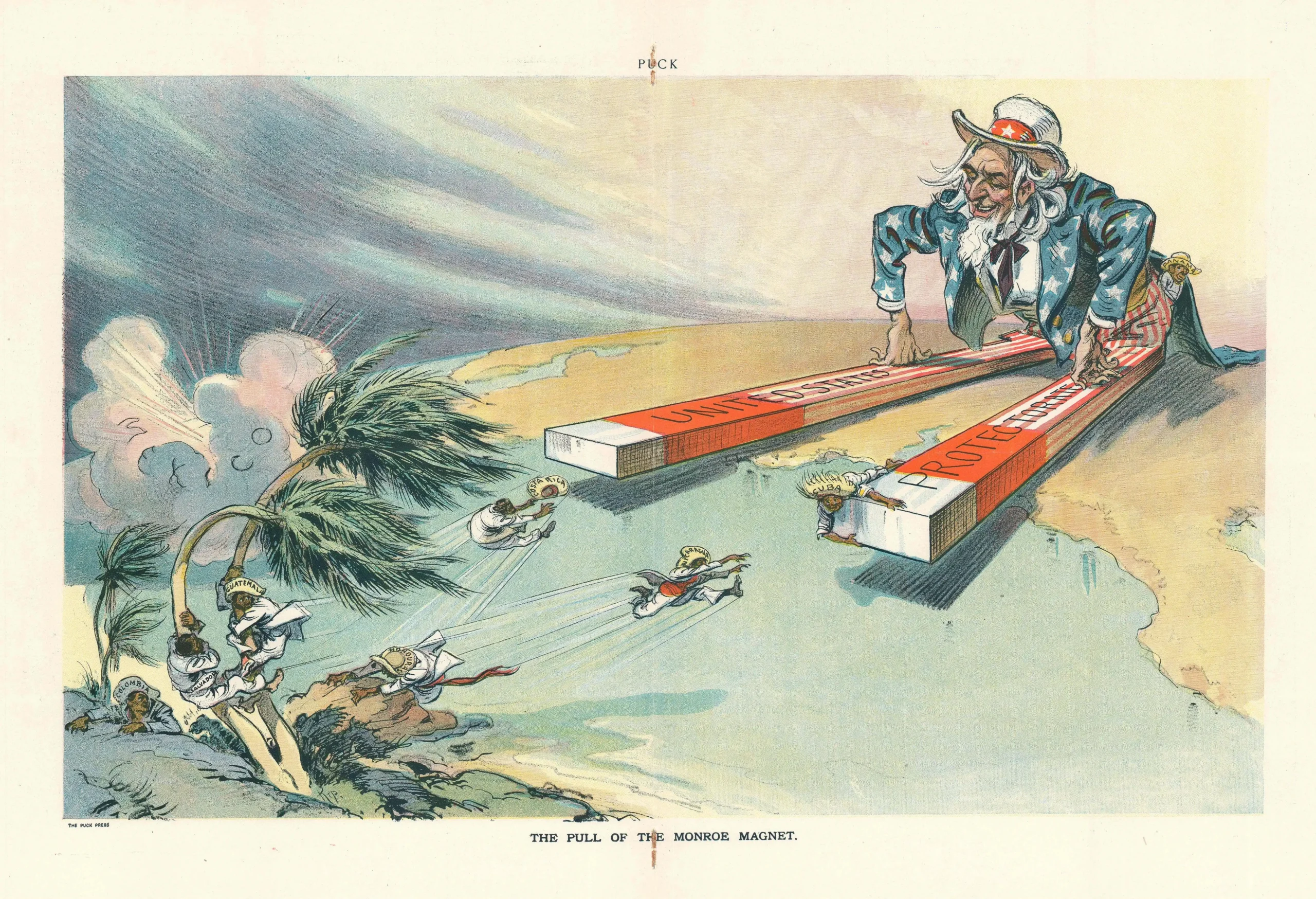

What the Monroe Doctrine actually said — and why Trump is invoking it now

What the Monroe Doctrine actually said — and why Trump is invoking it now -

Love with responsibility: rethinking supply chains this Valentine’s Day

Love with responsibility: rethinking supply chains this Valentine’s Day -

Why the India–EU trade deal matters far beyond diplomacy

Why the India–EU trade deal matters far beyond diplomacy -

Why the countryside is far safer than we think - and why apex predators belong in it

Why the countryside is far safer than we think - and why apex predators belong in it