The AI clause: why a Hollywood-style strike in the U.S. games industry may redefine automation rights worldwide

Aleksey Savchenko

- Opinion & Analysis

A landmark labour dispute in the U.S. games industry has just ended with new contractual limits on how AI can replicate human voices. Aleksey Savchenko explores how the outcome could influence automation policy, intellectual property law and creative rights across Europe.

Earlier this summer, the American union representing actors, performers and voice artists – known as SAG-AFTRA (the Screen Actors Guild – American Federation of Television and Radio Artists) – concluded a long and bitter dispute with the largest video game publishers in the world. Few outside the industry paid close attention. But they should have.

The agreement struck at the core of a growing global debate: who owns your likeness, your voice, or your performance – and can it be used by machines without your permission?

In a landmark deal, SAG-AFTRA secured a clause requiring “explicit consent and transparency” before any company can digitally replicate a performer’s voice or image using artificial intelligence (AI). For the games industry, where hundreds of characters in blockbuster titles rely on professional voice work, it was a seismic development. For the wider technology sector, especially in Europe, where AI regulation is accelerating, it may well set the tone.

What began as a labour action over residual payments and working conditions became a legal and ethical line in the sand about how AI technologies, particularly generative AI and machine learning (ML), should be used in commercial production.

And while this dispute played out in North America, its implications are global. In Europe, where the AI Act has entered into force and is being phased in, and where questions of digital rights and creative ownership are under legislative scrutiny, the outcome offers a live case study in what happens when real people, not algorithms, push back.

SAG-AFTRA began its negotiations with video game publishers, including Activision Blizzard, Electronic Arts and Take-Two Interactive, in late 2022. At issue were the terms under which performers’ voices and physical performances were used in-game, particularly as the use of AI tools in production pipelines began to increase.

The union’s concern centred on AI voice synthesis: technologies that can generate lifelike speech based on samples of a performer’s voice. These models, often powered by machine learning algorithms trained on large volumes of recorded material, can recreate tone, pacing and inflection with astonishing accuracy.

Some publishers were exploring ways to use these systems to produce additional dialogue or vocal performances without rehiring the original actor – a potentially vast cost-saving move, but one that raised major ethical and legal concerns. In many cases, performers had not granted consent for their voices to be used in this way. Worse, some had no idea their recorded performances were being used to train AI models at all.

For voice actors, many of whom work freelance or on short-term contracts, the fear was existential: if a game studio could replicate your voice from past work, what’s to stop it from never hiring you again?

Months of negotiation followed, during which broader tensions about the role of AI in creative industries rose to the fore. As with the Hollywood writers’ and actors’ strikes of 2023, the central issue was not only jobs but also ownership of, and control over, your digital self, and the right to say no.

Last week, SAG-AFTRA declared victory on its most important demand: no AI replicas without permission.

It was one of the first times a major digital production sector had codified this protection in a formal labour agreement. And it raises a much bigger question, one now facing creative and technology businesses across Europe and beyond: where do AI tools belong, and where don’t they?

The term “artificial intelligence” has become something of a catch-all, used as it is to describe everything from machine-generated imagery and chatbot assistants to search optimisation and predictive analytics. But in the context of the SAG-AFTRA dispute and its wider implications for digital production, we are mostly talking about a specific subset of AI: generative models.

At the heart of the debate are systems built using machine learning, a form of computer programming that enables software to detect patterns and generate outputs based on vast quantities of data, rather than being directly coded for each task. These systems do not think, reason or understand in the human sense. Instead, they are programmed to calculate probabilities based on examples they have been exposed to.

Among the most prominent examples are large language models (LLMs): machine learning systems trained on huge datasets of text, enabling them to generate human-like responses, simulate dialogue, or summarise information in natural language. These are the engines behind many generative AI tools currently on the market.

In the context of the games industry, a particularly contentious application is AI voice synthesis, where a performer’s recorded voice is used to train a model capable of generating entirely new lines of dialogue, mimicking their tone, pacing and emotional delivery. These systems can convincingly simulate speech using a person’s voice profile, and have advanced rapidly in recent years.

The issue is not whether this technology works. In many cases, it works well enough to be indistinguishable from the real thing. The issue at stake is ownership: who controls the original data, who benefits from the synthetic output, and whether the person being replicated has any say in the matter.

This is where the legal frameworks, especially around consent, copyright, and data rights, begin to fray.

In most territories, including the U.S. and much of Europe, the law is still playing catch-up. Performers, writers and artists are increasingly discovering that their past contributions are now fuelling new products without credit or compensation.

That, in essence, is what the SAG-AFTRA strike was about.

But this isn’t just a question of labour rights. It goes to the heart of what AI is being used for and who is really benefiting from its deployment at scale.

The success of generative AI owes as much to marketing as it does to mathematics. Silicon Valley has learned to tell two stories: one for the public, and one for investors.

For consumers, AI is sold as a convenience: tools that save time, extend access, and offer new ways to learn, communicate or create. It promises personal empowerment with less effort and more output.

For investors and governments, meanwhile, it sells something else entirely: automation. Less labour, more productivity. Lower costs. Reduced friction. And in the case of government clients, especially in surveillance, defence, and civil service delivery, it’s also a promise of control.

The danger is that both stories are oversold. While generative AI has legitimate value in certain fields such as data processing, research, language translation, and procedural modelling, its more radical claims are often underpinned by vague definitions, weak regulation and hype-fuelled funding rounds.

The technology, in other words, works, but not nearly as broadly, accurately, or safely as its evangelists suggest.

This is particularly relevant to the video games industry, a sector that, in terms of complexity and interdisciplinary design, is among the hardest environments in which to deploy AI tools responsibly.

The idea that games could be “made by AI” has become a popular talking point. In practice, the reality is far more limited and far more revealing.

Modern video games are legally complex, collaborative, multimedia software products. Everything from the characters’ dialogue to the music, artwork, animation, codebase and underlying physics systems is usually governed by contracts, licensing agreements or proprietary tools. Replicating almost any of this with AI models trained on internet-scale datasets carries major intellectual property risks.

Where AI has found some traction in game development is in narrow, production-level use cases. Concept teams, for instance, have started using generative tools to produce quick mock-ups or stylistic references during early visual exploration. In quality assurance, some studios have adopted machine learning systems to assist in bug reporting and pattern detection, speeding up what is typically a labour-intensive process. Localisation pipelines, often under tight time and budget constraints, have experimented with AI-generated placeholder voice lines or rough machine translations to bridge the gap before human refinement.

And in the more technical areas of production, such as building game versions, managing asset libraries, or handling repetitive integration tasks, automation has offered modest efficiencies when used under close supervision.

Even then, these implementations are often highly compartmentalised, require trained oversight, and come with significant editing overhead. Rather than replacing jobs, they merely assist professionals who already know the process.

Such automation offers real and tangible gains: productivity boosts of 20–30% in specific areas have been reported in some studios. But that only holds if teams are experienced and the tools are carefully integrated. The risk is that AI tools, when misapplied, simply create more work downstream, not less.

One of the clearest lessons from decades of game development is that great games don’t emerge from technical perfection alone; they succeed because someone (or, more usually, a group of people) had a vision.

That vision is often flawed, messy, personal. It draws on memory, emotion, instinct. It changes in response to team input. And most crucially, it evolves over time, often across hundreds of iterative builds and thousands of human decisions.

AI tools are not equipped for that kind of conceptual authorship. They are not designers. They are not storytellers. They don’t have taste, context, or intuition. At best, they can simulate structure. At worst, they strip away originality in favour of average output.

This is why most successful studios treat AI as a set of limited-use utilities, not as replacements for creative leadership. The idea that a video game, with all its interlocking systems and player psychology, could be “generated” in any meaningful sense is, for now, deeply unrealistic.

Despite all this, AI does have a role to play in interactive entertainment, but only if it’s reframed properly. Instead of seeking to replace talent, the best studios are looking to augment it. That means using machine learning in more strategic and system-oriented ways.

In large-scale open-world games, for example, developers are exploring how simulation systems – from weather patterns to crowd behaviour – can be managed more efficiently through AI-driven modelling. Non-player characters, or NPCs, might behave in more complex and believable ways using behaviour models informed by real-time data. In live-service games, where developers rely heavily on player feedback and behavioural metrics, machine learning is being tested as a tool to help interpret that data and adjust gameplay dynamically to improve the player experience. Some studios are also experimenting with procedural storytelling by designing systems that respond to player choices and simulate in-game consequences within a structured framework.

Such work is still largely in the research phase and depends on close coordination between designers, programmers and narrative teams. It needs new design thinking, new pipelines, and new legal frameworks, especially around IP rights, data ownership, and creator compensation.

The next five years will be critical in determining whether AI in games becomes a collaborative engine or just another shortcut that compromises the integrity of the product. As the European Union’s AI Act begins to reshape how generative systems can be developed and deployed, the games industry (and indeed every creative sector) faces the same central dilemma: how do we ensure that technology serves people, not the other way around?

Aleksey Savchenko is a veteran game developer, futurist, author, and BAFTA member with nearly three decades’ experience in the tech and entertainment industries. Currently the Director of RnD, Technology and External Resources at GSC Game World, he has worked on the studio’s acclaimed S.T.A.L.K.E.R. 2. He has also worked for Epic Games, known for Fortnite and for its Unreal Engine middleware technology, where he played an instrumental role in establishing Unreal Engine among Eastern European developers. He is the author of Game as Business and the Cyberside series of cyberpunk graphic novels.

Main picture: Victoria/Pixabay

RECENT ARTICLES

-

The era of easy markets is ending — here are the risks investors can no longer ignore

The era of easy markets is ending — here are the risks investors can no longer ignore -

Is testosterone the new performance hack for executives?

Is testosterone the new performance hack for executives? -

Can we regulate reality? AI, sovereignty and the battle over what counts as real

Can we regulate reality? AI, sovereignty and the battle over what counts as real -

NATO gears up for conflict as transatlantic strains grow

NATO gears up for conflict as transatlantic strains grow -

Facial recognition is leaving the US border — and we should be concerned

Facial recognition is leaving the US border — and we should be concerned -

Wheelchair design is stuck in the past — and disabled people are paying the price

Wheelchair design is stuck in the past — and disabled people are paying the price -

Why Europe still needs America

Why Europe still needs America -

Why Europe’s finance apps must start borrowing from each other’s playbooks

Why Europe’s finance apps must start borrowing from each other’s playbooks -

Why universities must set clear rules for AI use before trust in academia erodes

Why universities must set clear rules for AI use before trust in academia erodes -

The lucky leader: six lessons on why fortune favours some and fails others

The lucky leader: six lessons on why fortune favours some and fails others -

Reckon AI has cracked thinking? Think again

Reckon AI has cracked thinking? Think again -

The new 10 year National Cancer Plan: fewer measures, more heart?

The new 10 year National Cancer Plan: fewer measures, more heart? -

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing -

The legality of tax planning in an age of moral outrage

The legality of tax planning in an age of moral outrage -

The limits of good intentions in public policy

The limits of good intentions in public policy -

Are favouritism and fear holding back Germany’s rearmament?

Are favouritism and fear holding back Germany’s rearmament? -

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth -

Why mere survival is no longer enough for children with brain tumours

Why mere survival is no longer enough for children with brain tumours -

What Germany’s Energiewende teaches Europe about power, risk and reality

What Germany’s Energiewende teaches Europe about power, risk and reality -

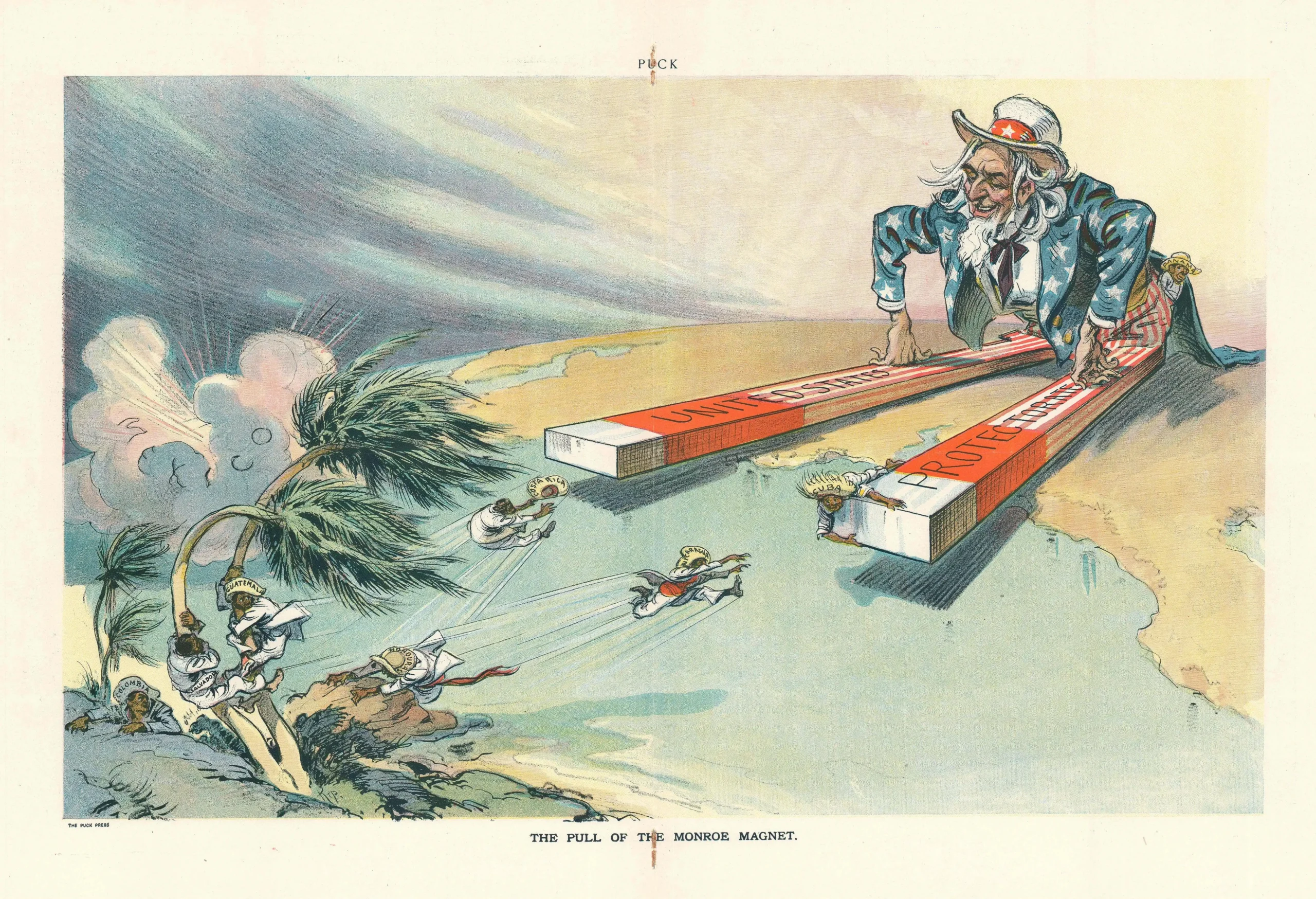

What the Monroe Doctrine actually said — and why Trump is invoking it now

What the Monroe Doctrine actually said — and why Trump is invoking it now -

Love with responsibility: rethinking supply chains this Valentine’s Day

Love with responsibility: rethinking supply chains this Valentine’s Day -

Why the India–EU trade deal matters far beyond diplomacy

Why the India–EU trade deal matters far beyond diplomacy -

Why the countryside is far safer than we think - and why apex predators belong in it

Why the countryside is far safer than we think - and why apex predators belong in it -

What if he falls?

What if he falls? -

Trump reminds Davos that talk still runs the world

Trump reminds Davos that talk still runs the world