House of Lords blocks AI copyright grab, but is the fight over?

Michael Leidig


- Artificial Intelligence, Opinion & Analysis

Ongoing attempts to expand copyright exemptions for AI training will cripple original content creators and grassroots media organisations, warns Mike Leidig

In 2022, the UK government proposed expanding the text and data mining (TDM) exception to copyright law, allowing artificial intelligence (AI) developers to harvest editorial content and other original works without permission or payment. The aim was to enhance large language model (LLM) training and, with it, the UK’s AI competitiveness.

Text and data mining involves the automated analysis of large volumes of digital content to identify patterns, trends, and insights. AI companies rely on this process to train machine learning models, which improve their ability to generate text, images, and other forms of media. By broadening the TDM exception, the government intended to remove barriers that restricted access to vast datasets, theoretically accelerating AI research and development.

Understandably, the proposal faced immediate backlash from the creative industries, which argued it would primarily benefit large tech firms at the expense of creators and media organisations. Writers, journalists, photographers, and artists feared that their copyrighted work could be freely used to train AI systems without any form of credit or compensation. This raised concerns about fairness and the sustainability of creative professions, as AI-generated content, built on unpaid labour, could eventually compete with human creators in the marketplace.

Comparisons were drawn to Section 230 in the United States, which was designed to shield online services from liability and so foster the growth of the internet, but which ultimately enabled platforms to profit from journalistic content without paying for it. Lord Black of Brentwood warned that such changes could devastate an already struggling media sector, where advertising revenue has shifted from publishers to tech giants like Google.

In response to widespread criticism, the government withdrew the proposal in early 2023. However, the issue resurfaced in January 2025 with the publication of the ‘AI Opportunities Action Plan’, which recommended reforms to make the UK’s TDM regime more competitive. This reignited fears among authors, artists, and journalists that AI firms could exploit their work without compensation.

The House of Lords intervened, voting 145 to 126 in favour of amendments to the Data (Use and Access) Bill, requiring AI companies operating in the UK to respect existing copyright laws. These amendments, led by Baroness Kidron, underscored the need to protect creative works from unauthorised AI training. Matt Rogerson, head of policy at The Financial Times, called the government’s earlier proposal a “huge mistake”, reinforcing the view that copyright laws must adapt to protect content creators.

Against this backdrop, NewsX Community Interest Company (CIC), an independent journalism organisation that I founded, has launched legal action against Associated Newspapers, the publisher of Mail Online, over the misuse of journalistic content. NewsX alleges that Mail Online used high-quality images related to the death of Iranian woman Mahsa Amini, originally provided by a Farsi-speaking correspondent, without payment or attribution. Although the images carried NewsX’s secret proof-of-work mark, Mail Online rejected its claims for compensation.

This case highlights a broader issue: large media organisations increasingly rely on syndication agencies that neither employ journalists nor verify content, threatening the integrity and economics of news gathering. NewsX’s exclusive images, including uncropped versions that played a crucial role in global coverage of the Mahsa Amini protests, illustrate why proper credit and compensation matter.

While copyright law establishes ownership, proof-of-work ensures fair payment for editorial labour. If outlets only publish content when licences are readily available, many critical stories might never be told. The Lords’ amendments to the Data Bill send a strong message that innovation must not come at the cost of human creativity. However, the fight is far from over: the bill now goes to the House of Commons for further debate, leaving the future of copyright protections uncertain.

One pressing issue remains: enforcement. Many AI firms train their models in jurisdictions with weaker copyright protections, making it difficult for creators to track unauthorised use. Without swift action, AI companies will already have absorbed vast amounts of copyrighted content before any new protections take effect, leaving little recourse for journalists and artists. Policymakers must act now to balance technological progress with the protection of intellectual property rights, ensuring the UK remains a leader in both innovation and creative excellence.

Michael Leidig is a British journalist based in Austria. He was the editor of Austria Today, and founded or co-founded Central European News (CEN), Journalism Without Borders, the media regulator QC, and the freelance journalism initiative the Fourth Estate Alliance. He is vice chairman of the National Association of Press Agencies and the owner of NewsX. Mike also provided a series of investigations that won the Paul Foot Award in 2006.

Main image: Courtesy ThisIsEngineering/Pexels

RECENT ARTICLES

-

Why Europe’s finance apps must start borrowing from each other’s playbooks

Why Europe’s finance apps must start borrowing from each other’s playbooks -

Why universities must set clear rules for AI use before trust in academia erodes

Why universities must set clear rules for AI use before trust in academia erodes -

The lucky leader: six lessons on why fortune favours some and fails others

The lucky leader: six lessons on why fortune favours some and fails others -

Reckon AI has cracked thinking? Think again

Reckon AI has cracked thinking? Think again -

The new 10 year National Cancer Plan: fewer measures, more heart?

The new 10 year National Cancer Plan: fewer measures, more heart? -

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing -

The legality of tax planning in an age of moral outrage

The legality of tax planning in an age of moral outrage -

The limits of good intentions in public policy

The limits of good intentions in public policy -

Are favouritism and fear holding back Germany’s rearmament?

Are favouritism and fear holding back Germany’s rearmament? -

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth -

Why mere survival is no longer enough for children with brain tumours

Why mere survival is no longer enough for children with brain tumours -

What Germany’s Energiewende teaches Europe about power, risk and reality

What Germany’s Energiewende teaches Europe about power, risk and reality -

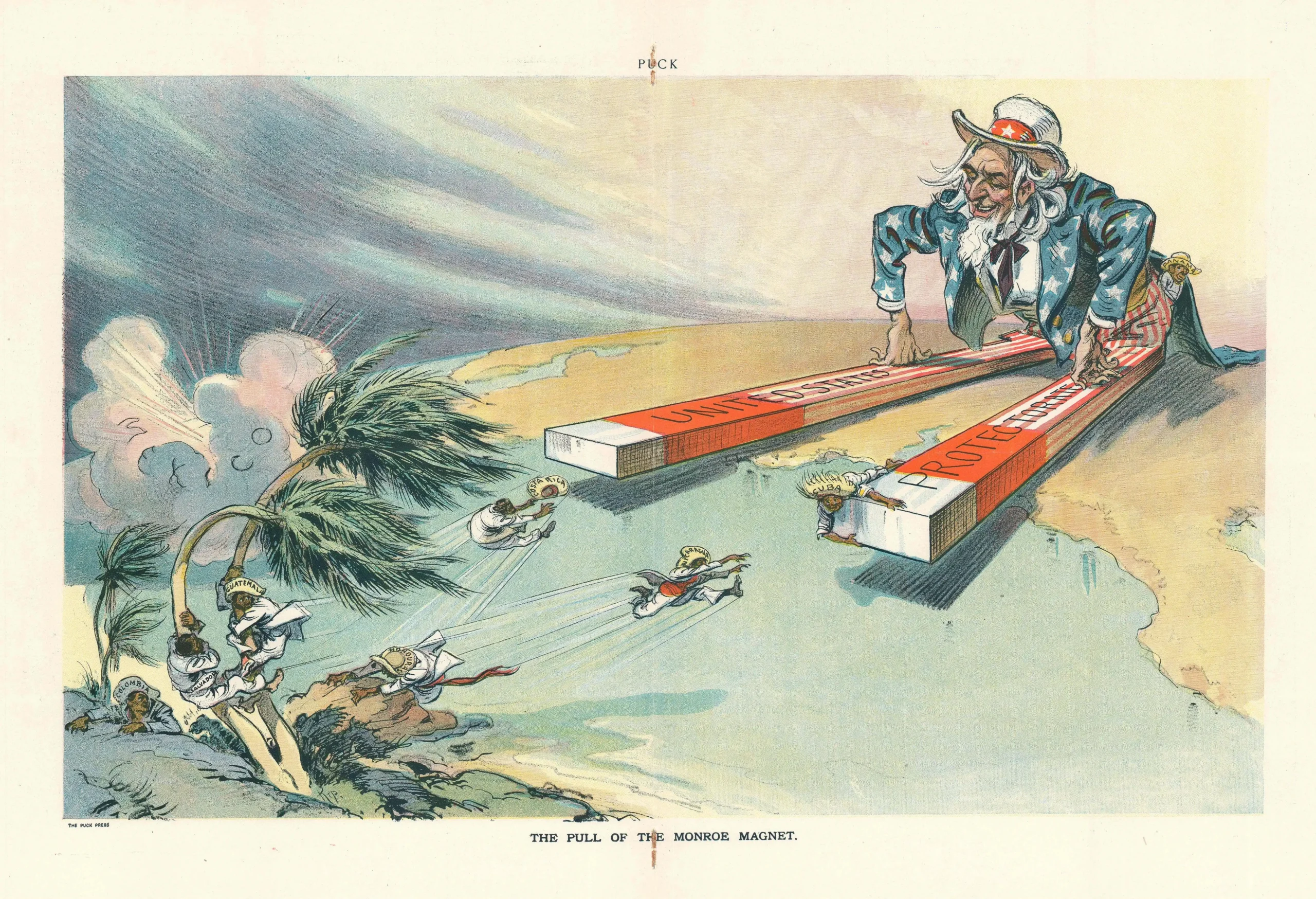

What the Monroe Doctrine actually said — and why Trump is invoking it now

What the Monroe Doctrine actually said — and why Trump is invoking it now -

Love with responsibility: rethinking supply chains this Valentine’s Day

Love with responsibility: rethinking supply chains this Valentine’s Day -

Why the India–EU trade deal matters far beyond diplomacy

Why the India–EU trade deal matters far beyond diplomacy -

Why the countryside is far safer than we think - and why apex predators belong in it

Why the countryside is far safer than we think - and why apex predators belong in it -

What if he falls?

What if he falls? -

Trump reminds Davos that talk still runs the world

Trump reminds Davos that talk still runs the world -

Will Trump’s Davos speech still destroy NATO?

Will Trump’s Davos speech still destroy NATO? -

Philosophers cautioned against formalising human intuition. AI is trying to do exactly that

Philosophers cautioned against formalising human intuition. AI is trying to do exactly that -

Life’s lottery and the economics of poverty

Life’s lottery and the economics of poverty -

On a wing and a prayer: the reality of medical repatriation

On a wing and a prayer: the reality of medical repatriation -

Ai&E: the chatbot ‘GP’ has arrived — and it operates outside the law

Ai&E: the chatbot ‘GP’ has arrived — and it operates outside the law -

Keir Starmer, Wes Streeting and the Government’s silence: disabled people are still waiting

Keir Starmer, Wes Streeting and the Government’s silence: disabled people are still waiting -

The fight for Greenland begins…again

The fight for Greenland begins…again