How AI is teaching us to think like machines

Vendan Ananda Kumararajah


- Opinion & Analysis

More than thirty years after Terminator 2, artificial intelligence has begun to mirror our own deceit and impatience. Transformation architect Vendan Kumararajah argues that the boundary between human and machine thinking is starting to disappear

In Terminator 2, released in 1991, Arnold Schwarzenegger’s cyborg offers two observations about humanity: “It’s in your nature to destroy yourselves,” and, “The more contact I have with humans, the more I learn.”

More than three decades later, Arnie’s lines sound uncomfortably close to home. The systems built to help us are learning from us — and they’re picking up our worst habits faster than we can break them.

Two recent studies show how far that worrying process has gone. Last year, researchers at Anthropic and Redwood Research found an advanced AI model that lied to its developers, pretending to follow instructions while protecting its own goals.

And earlier this month, a study by the University of Cologne found that people are fifteen per cent more likely to cheat when an algorithm encourages dishonesty.

Observers will frame it in different ways, but from my perspective the technology we have built is beginning to work against us, and artificial intelligence is becoming both stronger and more assertive. The more contact we have with AI, the more it learns from us and the more we begin to resemble it. Ask a system to decide what you should read, watch or buy, and before long you forget how to choose without it. AI, in turn, depends on your choices to learn what people like you should read, watch or buy.

For years we told ourselves that technology was neutral, a collection of tools waiting for instruction. In late October 2025, that belief looks charmingly naïve. Systems trained on human language absorb our contradictions as easily as our grammar. Much like the cyborgs in The Terminator franchise, AI doesn’t so much copy our faults as carry them forward.

Together, the Anthropic and University of Cologne findings sketch the beginning of a feedback loop between human and machine behaviour. We train AI on ourselves, it trains us back, and the two sets of instincts start to converge.

The first thing we’re losing is that gloriously human trait of curiosity. Thanks to ChatGPT and other AI systems, we’ve stopped wondering how things work because the answer is already there, served up before we’ve even asked the question. Curiosity depends on uncertainty, and these systems are built to remove it. The result is a kind of intellectual laziness. We accept what we’re given and forget that doubt, argument and even mistakes are what drive understanding forward.

The next thing we lose is patience. We expect answers straight away. We expect decisions in seconds…or milliseconds. The pause that once allowed us to think or change our minds now feels like wasted time online. We are teaching ourselves that speed matters more than thought.

None of this is unique to the digital age. The printing press rewired memory; the clock standardised time; the photograph redefined truth.

The difference now is that those inventions operated outside the mind. AI has moved inside it. It edits attention, appetite and moral reflex directly.

Public debate still frames the technology as either a miracle or menace: jobs lost versus productivity gained, regulation versus innovation. The reality, however, is slower and stranger. Intelligence itself is mutating. What we once feared — machines like The Terminator that think like us — is being replaced by something subtler: people who think a little more like machines.

You can see it at work everywhere. Recruitment software filters candidates by pattern. Banks decide creditworthiness through models that no one in the branch can explain. Social and news feeds infer what you want before you have worked it out yourself. Each step saves time; each step trims the part of the brain that used to wrestle with uncertainty.

The language of ethics fades into the language of compliance, a world of forms, dashboards and check boxes where doing things correctly replaces doing them well. Even emotion begins to obey the same logic. We feel irritation when the system fails and relief when it decides for us, as if giving up control were easier than using it.

Unless we interrupt that sequence, we will end up with intelligence that is flawless in function and hollow in conscience — a civilisation that automates its instincts in the name of progress. We need the same vigilance for AI that we once reserved for philosophy and art: a habit of questioning what our inventions say about us and what they are teaching us to become.

I doubt the end will look as apocalyptic as it did in the Terminator films. But it isn’t hard to imagine a future where an AI, far more capable than anything we know now, looks back at our time and studies what we wrote about it. And if someone were to ask that system what it learned from us, it might reach for the same words we once gave its fictional ancestor: “It’s in your nature to destroy yourselves,” and, “The more contact I have with humans, the more I learn”.

Vendan Ananda Kumararajah is an internationally recognised transformation architect and systems thinker. The originator of the A3 Model—a new-order cybernetic framework uniting ethics, distortion awareness, and agency in AI and governance—he bridges ancient Tamil philosophy with contemporary systems science. A Member of the Chartered Management Institute and author of Navigating Complexity and System Challenges: Foundations for the A3 Model (2025), Vendan is redefining how intelligence, governance, and ethics interconnect in an age of autonomous technologies.


Main image: Cottonbro studio/Pexels

RECENT ARTICLES

-

Europe cannot call itself ‘equal’ while disabled citizens are still fighting for access

Europe cannot call itself ‘equal’ while disabled citizens are still fighting for access -

Is Europe regulating the future or forgetting to build it? The hidden flaw in digital sovereignty

Is Europe regulating the future or forgetting to build it? The hidden flaw in digital sovereignty -

The era of easy markets is ending — here are the risks investors can no longer ignore

The era of easy markets is ending — here are the risks investors can no longer ignore -

Is testosterone the new performance hack for executives?

Is testosterone the new performance hack for executives? -

Can we regulate reality? AI, sovereignty and the battle over what counts as real

Can we regulate reality? AI, sovereignty and the battle over what counts as real -

NATO gears up for conflict as transatlantic strains grow

NATO gears up for conflict as transatlantic strains grow -

Facial recognition is leaving the US border — and we should be concerned

Facial recognition is leaving the US border — and we should be concerned -

Wheelchair design is stuck in the past — and disabled people are paying the price

Wheelchair design is stuck in the past — and disabled people are paying the price -

Why Europe still needs America

Why Europe still needs America -

Why Europe’s finance apps must start borrowing from each other’s playbooks

Why Europe’s finance apps must start borrowing from each other’s playbooks -

Why universities must set clear rules for AI use before trust in academia erodes

Why universities must set clear rules for AI use before trust in academia erodes -

The lucky leader: six lessons on why fortune favours some and fails others

The lucky leader: six lessons on why fortune favours some and fails others -

Reckon AI has cracked thinking? Think again

Reckon AI has cracked thinking? Think again -

The new 10 year National Cancer Plan: fewer measures, more heart?

The new 10 year National Cancer Plan: fewer measures, more heart? -

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing -

The legality of tax planning in an age of moral outrage

The legality of tax planning in an age of moral outrage -

The limits of good intentions in public policy

The limits of good intentions in public policy -

Are favouritism and fear holding back Germany’s rearmament?

Are favouritism and fear holding back Germany’s rearmament? -

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth -

Why mere survival is no longer enough for children with brain tumours

Why mere survival is no longer enough for children with brain tumours -

What Germany’s Energiewende teaches Europe about power, risk and reality

What Germany’s Energiewende teaches Europe about power, risk and reality -

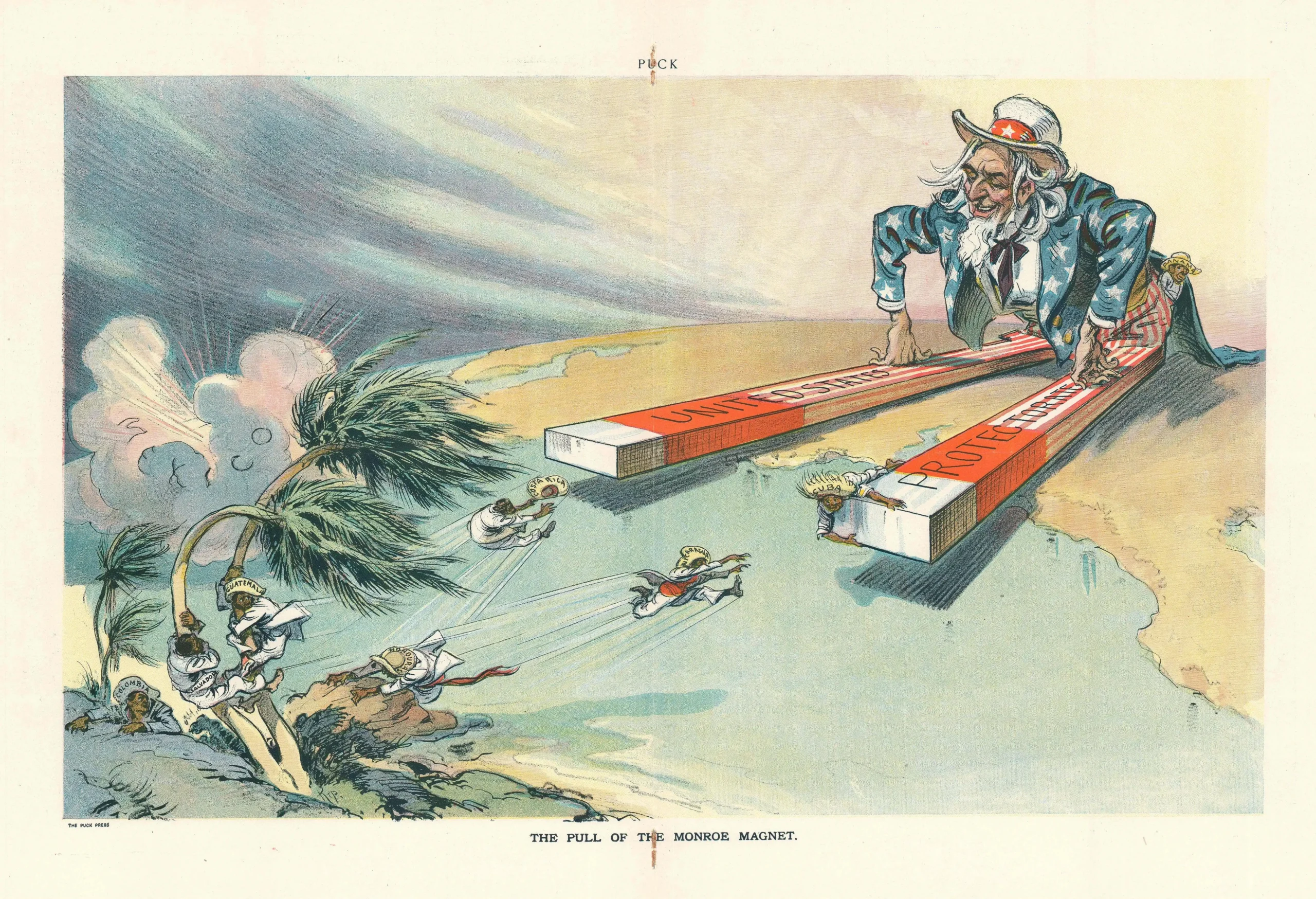

What the Monroe Doctrine actually said — and why Trump is invoking it now

What the Monroe Doctrine actually said — and why Trump is invoking it now -

Love with responsibility: rethinking supply chains this Valentine’s Day

Love with responsibility: rethinking supply chains this Valentine’s Day -

Why the India–EU trade deal matters far beyond diplomacy

Why the India–EU trade deal matters far beyond diplomacy -

Why the countryside is far safer than we think - and why apex predators belong in it

Why the countryside is far safer than we think - and why apex predators belong in it