Balancing the risks and rewards of AI

John E. Kaye

- Artificial Intelligence, Technology

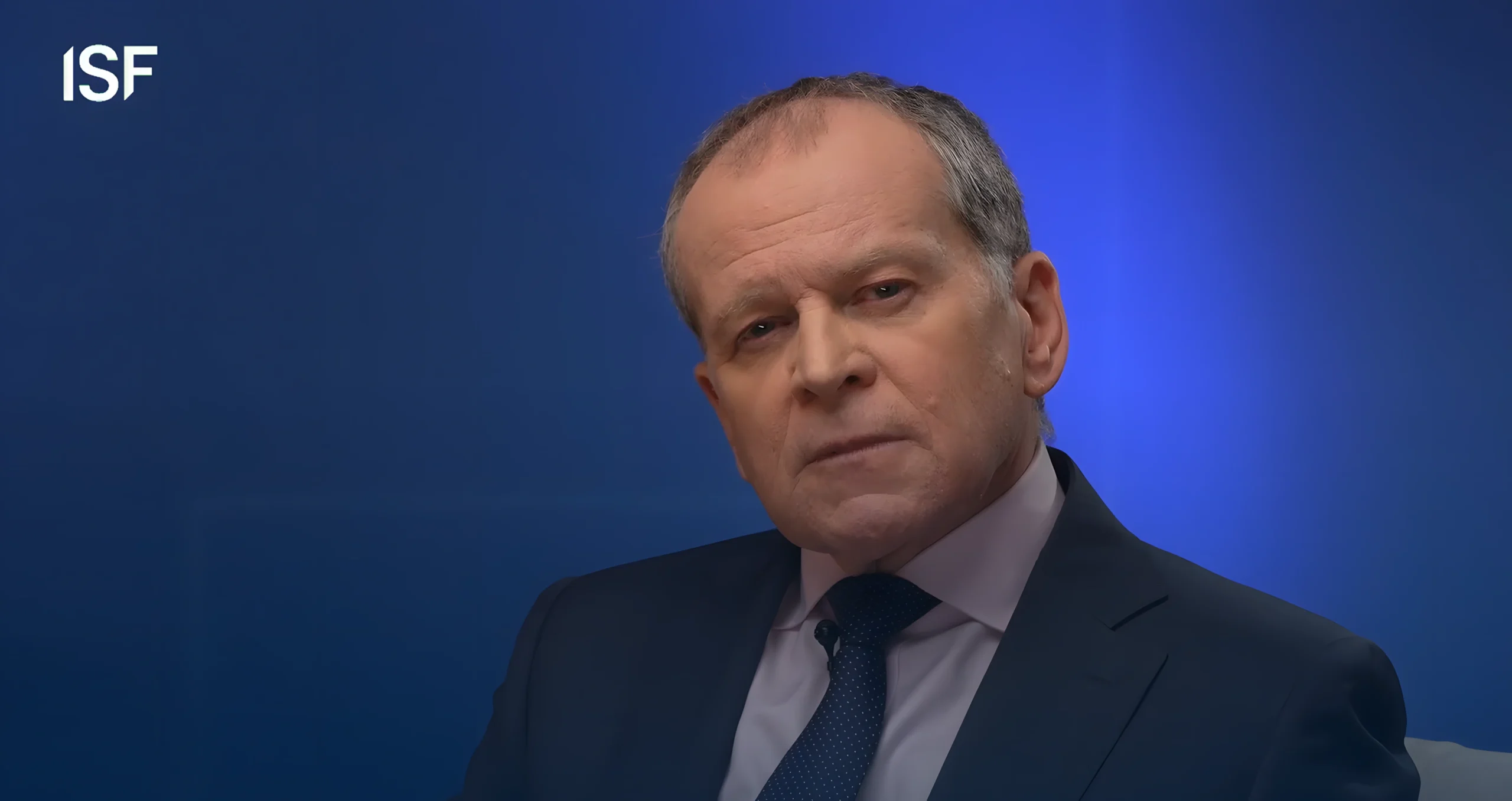

By Steve Durbin, Chief Executive, Information Security Forum

AI holds immense potential to enhance every aspect of business, from driving innovation and uncovering hidden insights to improving staff productivity and operational efficiency. On the other hand, AI also poses numerous risks for businesses.

For example, AI systems are trained on large datasets. If these datasets are inherently biased, then the outputs or decisions of these AI systems will reflect that bias. AI also boosts the ability of threat actors to target and automate their attacks. As threat actors learn to harness and manipulate these technologies, organisations may find themselves overwhelmed and under-protected against cyberattacks. There are also legal and compliance consequences to consider. If AI systems violate the privacy of individuals, discriminate against them or treat them unfairly in any way, then organisations may be subject to fines, penalties and other legal ramifications. On top of that come the loss of customer trust, the damage to business reputation and the long-term harm to brand value that such failures may cause.

Bridging the boardroom AI knowledge gap

There’s no doubt that AI is a “hot topic” in boardroom discussions around the world. However, nearly three-quarters of board members still have minimal knowledge or experience with AI technology, which goes to show that there is an urgent need for AI literacy to be elevated in the boardroom. Beyond the bare minimum of AI literacy, there are other best practices board members should consider adopting:

- Embracing AI as Part of Corporate Strategy

AI should not be viewed merely as a technology or a tool that improves efficiency or reduces employee workloads. Board members must evaluate AI from a business and strategy perspective: before setting off on the AI journey, they need to understand the business goals, the value AI will create, the associated costs, the resource requirements, and the limitations and risks.

- Building Accountability and Oversight Structures

The question of whether AI oversight is a responsibility for the entire board of directors or can be assigned to the audit committee is still unresolved. This is because some discussions may not be applicable to the entire board. Either way, it is crucial to establish accountability, oversight and reporting structures to ensure that board members have the information and control required to make informed decisions and manage AI risks effectively.

- Establishing Security as a Core Pillar of the AI Foundation

Security should not be an afterthought; AI systems must be secure by design. This means embedding security measures like access controls, encryption and regular security audits throughout the entire development lifecycle. It also means tackling ethical and privacy concerns, implementing bias detection measures, ensuring AI outputs are traceable, transparent and accurate, and having a diverse and inclusive team that oversees all aspects of AI operations.

- Fostering a Culture of Transparency and Collaboration

About 41% of American workers feel that AI might take away their jobs. It’s crucial to recognise such emotions and engage the workforce to alleviate their anxiety or fears. Maintaining open communication and dialogue, providing learning and training resources, and extending care and support are all ways in which leadership can foster a culture of trust and make employees feel more empowered to use AI.

The AI revolution is definitely underway. However, it is important to avoid hasty decisions. By embracing AI as part of business strategy, establishing oversight structures, considering the security implications and understanding AI’s impact on company culture, organisations can ensure that the adoption and use of AI is managed in a secure, ethical and responsible manner.

Further information

linkedin.com/in/stevedurbin
