How AI agents are supercharging cybercrime

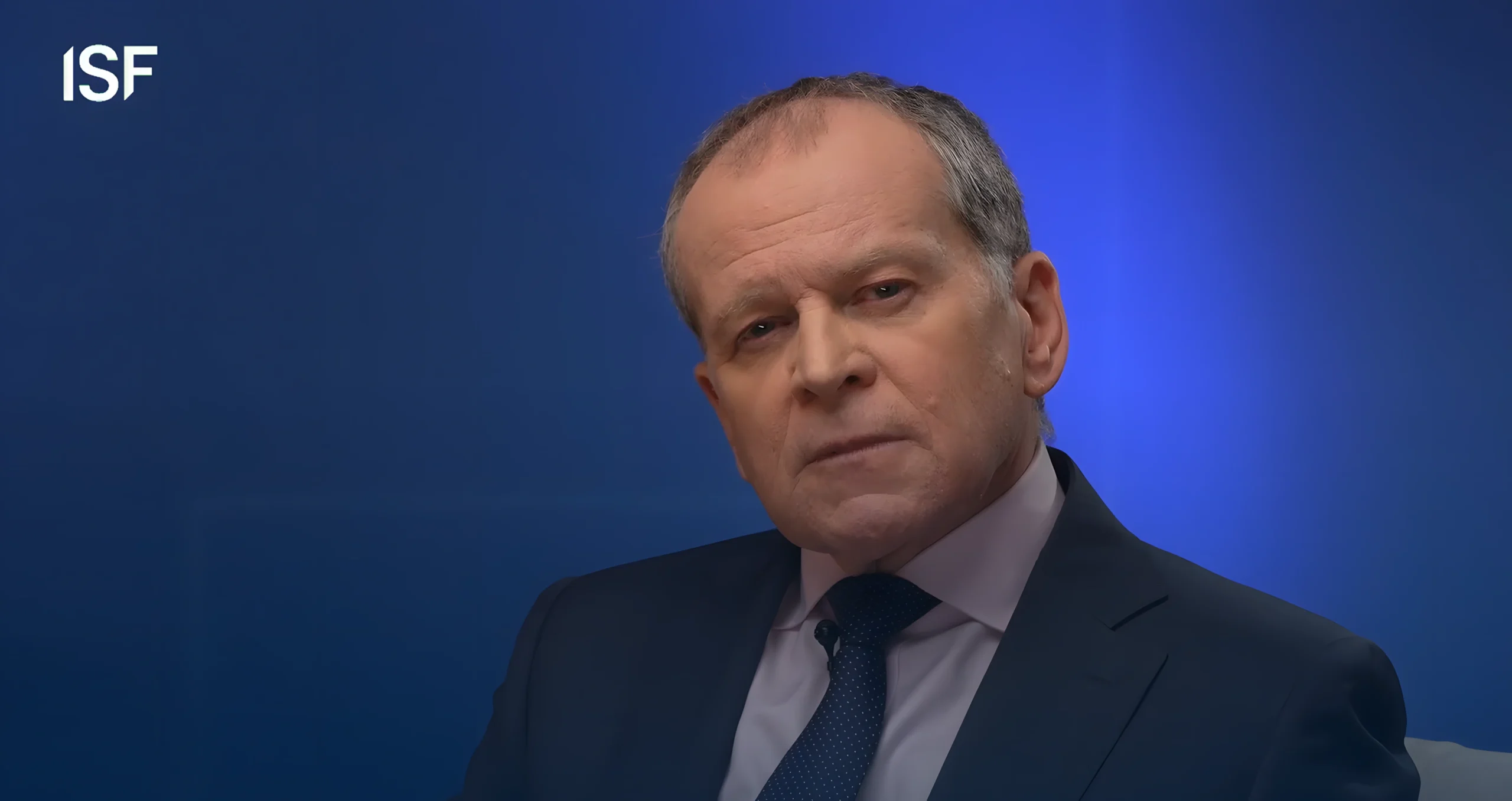

John E. Kaye

- Published

- Cybersecurity, Technology

AI agents capable of acting on their own are being deployed to scan networks, launch attacks, and bypass traditional security systems. Steve Durbin of the Information Security Forum explains how this technology is reshaping the cyber threat landscape — and what organisations need to do to stay ahead

Artificial intelligence agents are systems that can autonomously perform tasks on behalf of users. They can adapt to dynamic environments and make decisions without requiring human intervention. Their ability to perceive and act upon vast datasets autonomously is driving innovations and transforming value chains by optimising processes in sectors such as healthcare, manufacturing, finance and banking. According to a 2024 report, AI agents are expected to be adopted by 82% of organisations by 2027.

Weaponising AI Agents to Automate Cybercrime

The autonomy of AI agents implies advancements when used ethically and responsibly. However, their ability to make decisions independently and their adaptive nature have attracted the interest of malicious actors who can develop a team of agentic AI malware working collaboratively to automate attacks. Such scalable attacks can be executed with unprecedented efficiency and surpass the capabilities of existing threat detection systems. A 2025 executive summary on the state of AI in cybersecurity reports that 78% of CISOs believe AI-powered cyber threats are already significantly impacting their organisations.

Here’s how agentic AI can conceivably automate cyberattacks:

Polymorphic Malware: Like a living chameleon, this AI-generated malicious software can relentlessly change its code or appearance every time it infects a system. This enables it to evade detection by defences that rely on blocklists and static signatures.

Adaptive Malware: AI can automate malware creation that analyses its environment, identifies the security protocols in place, and adapts in real time to launch attacks.

Scalable Attacks: AI’s ability to automate repetitive tasks is exploited by malicious attackers to potentially launch large-scale campaigns that can simultaneously target millions of users with high precision; for example, attacks such as phishing emails, DDoS attacks, and credential harvesting.

Identifying Attackers’ Entry Points: AI systems can autonomously identify vulnerabilities and anomalies by scanning vast networks, finding potential access points. By helping bad actors reduce the time and effort it takes to identify security gaps within a targeted system, AI agents can launch attacks at scale with alarming speed, achieving maximum impact.

Synthetic Identity Fraud: Threat actors exploit AI to create synthetic identities by blending real and fake personal data. Because such synthetic personas can appear legitimate and evade fraud detection, they are commonly used in attacks involving identity theft and social engineering lures.

Personalised Phishing Campaigns: AI amplifies the efficiency of phishing campaigns by scanning and analysing victims’ personal data in public domains. By farming this data, AI can help create highly personalised and convincing phishing emails.

When AI Agents Go Rogue

AI agents use machine learning to continually learn from vast amounts of real-time data and plan their actions. However, unrestricted access to vast amounts of data, along with autonomy, can threaten an organisation’s security and pose regulatory risks when AI agents become rogue and stray from their intended purpose. Rogue AI agents could arise from malicious intent due to deliberate tampering or inadvertently from flawed system design, programming errors, or simply due to user carelessness.

Attackers can manipulate AI’s training data to exploit the autonomy of rogue AI agents through techniques such as:

Direct prompt injection: Attackers give incorrect instructions to manipulate large language models (LLMs) into disclosing sensitive data or executing harmful commands.

Indirect prompt injection: Attackers embed malicious instructions within external data sources such as a website or a document that the AI accesses.

Data poisoning: The training data is poisoned or seeded with incorrect or deceptive information to train the AI model. It undermines the model’s integrity, producing erroneous, biased, or malicious results.

Model manipulation: Attackers intentionally weaken an AI system by injecting vulnerabilities during their training to control its responses, thereby compromising system integrity.

Data exfiltration: Attackers use prompts to manipulate LLMs to expose sensitive data.

Bad actors are using AI to achieve malicious results. In order to tap the true potential of AI, organisations need to consider the potential harm caused by rogue AI while planning their risk management approach to ensure AI is responsibly and safely used.

Defending Against Malicious or Rogue AI Agents

The following can help organisations remain secure from malicious AI agents:

AI-driven threat detection: Use AI-driven monitoring tools to detect the smallest deviations in system activity that may point to unauthorised access or malware.

Data protection tools: To ensure that sensitive data remains secure even if maliciously intercepted, encrypt it. Make sure important data is only accessible by valid users by using multi-factor authentication to minimise the risk of access by unauthorised users.

Resilient AI by adversarial training: To make AI models more resilient against malicious threats, retrain them on past adversarial attack data or subject them to simulated attacks.

Reliable training data: An accurate AI model can be developed by using high-quality training data. Relying on dependable datasets reduces biases and errors, helping to keep the model safe from being trained on malicious data.

Autonomous AI agents can increase efficiency and automate operations. But when they turn rogue, they may pose serious risks due to their ability to act independently and adapt quickly. Although minimally invasive today, risk managers should certainly be aware and on guard. By addressing the security issues native to AI, organisations can fully harness the immense potential AI has to offer.

About the Author

Steve Durbin is Chief Executive of the Information Security Forum, an independent association dedicated to investigating, clarifying, and resolving key issues in information security and risk management by developing best practice methodologies, processes and solutions that meet the business needs of its members. ISF membership comprises the Fortune 500 and Forbes 2000.

Further information:

RECENT ARTICLES

-

Could AI finally mean fewer potholes? Swedish firm expands road-scanning technology across three continents

Could AI finally mean fewer potholes? Swedish firm expands road-scanning technology across three continents -

Government consults on social media ban for under-16s and potential overnight curfews

Government consults on social media ban for under-16s and potential overnight curfews -

Twitter co-founder Jack Dorsey cuts nearly half of Block staff, says AI is changing how the company operates

Twitter co-founder Jack Dorsey cuts nearly half of Block staff, says AI is changing how the company operates -

AI-driven phishing surges 204% as firms face a malicious email every 19 seconds

AI-driven phishing surges 204% as firms face a malicious email every 19 seconds -

Deepfake celebrity ads drive new wave of investment scams

Deepfake celebrity ads drive new wave of investment scams -

Europe eyes Australia-style social media crackdown for children

Europe eyes Australia-style social media crackdown for children -

Europe opens NanoIC pilot line to design the computer chips of the 2030s

Europe opens NanoIC pilot line to design the computer chips of the 2030s -

Building the materials of tomorrow one atom at a time: fiction or reality?

Building the materials of tomorrow one atom at a time: fiction or reality? -

Universe ‘should be thicker than this’, say scientists after biggest sky survey ever

Universe ‘should be thicker than this’, say scientists after biggest sky survey ever -

Lasers finally unlock mystery of Charles Darwin’s specimen jars

Lasers finally unlock mystery of Charles Darwin’s specimen jars -

Women, science and the price of integrity

Women, science and the price of integrity -

Meet the AI-powered robot that can sort, load and run your laundry on its own

Meet the AI-powered robot that can sort, load and run your laundry on its own -

UK organisations still falling short on GDPR compliance, benchmark report finds

UK organisations still falling short on GDPR compliance, benchmark report finds -

A practical playbook for securing mission-critical information

A practical playbook for securing mission-critical information -

Cracking open the black box: why AI-powered cybersecurity still needs human eyes

Cracking open the black box: why AI-powered cybersecurity still needs human eyes -

Tech addiction: the hidden cybersecurity threat

Tech addiction: the hidden cybersecurity threat -

Parliament invites cyber experts to give evidence on new UK cyber security bill

Parliament invites cyber experts to give evidence on new UK cyber security bill -

ISF warns geopolitics will be the defining cybersecurity risk of 2026

ISF warns geopolitics will be the defining cybersecurity risk of 2026 -

AI boom triggers new wave of data-centre investment across Europe

AI boom triggers new wave of data-centre investment across Europe -

Make boards legally liable for cyber attacks, security chief warns

Make boards legally liable for cyber attacks, security chief warns -

AI innovation linked to a shrinking share of income for European workers

AI innovation linked to a shrinking share of income for European workers -

Europe emphasises AI governance as North America moves faster towards autonomy, Digitate research shows

Europe emphasises AI governance as North America moves faster towards autonomy, Digitate research shows -

Surgeons just changed medicine forever using hotel internet connection

Surgeons just changed medicine forever using hotel internet connection -

Curium’s expansion into transformative therapy offers fresh hope against cancer

Curium’s expansion into transformative therapy offers fresh hope against cancer -

What to consider before going all in on AI-driven email security

What to consider before going all in on AI-driven email security