Bringing the world together on AI

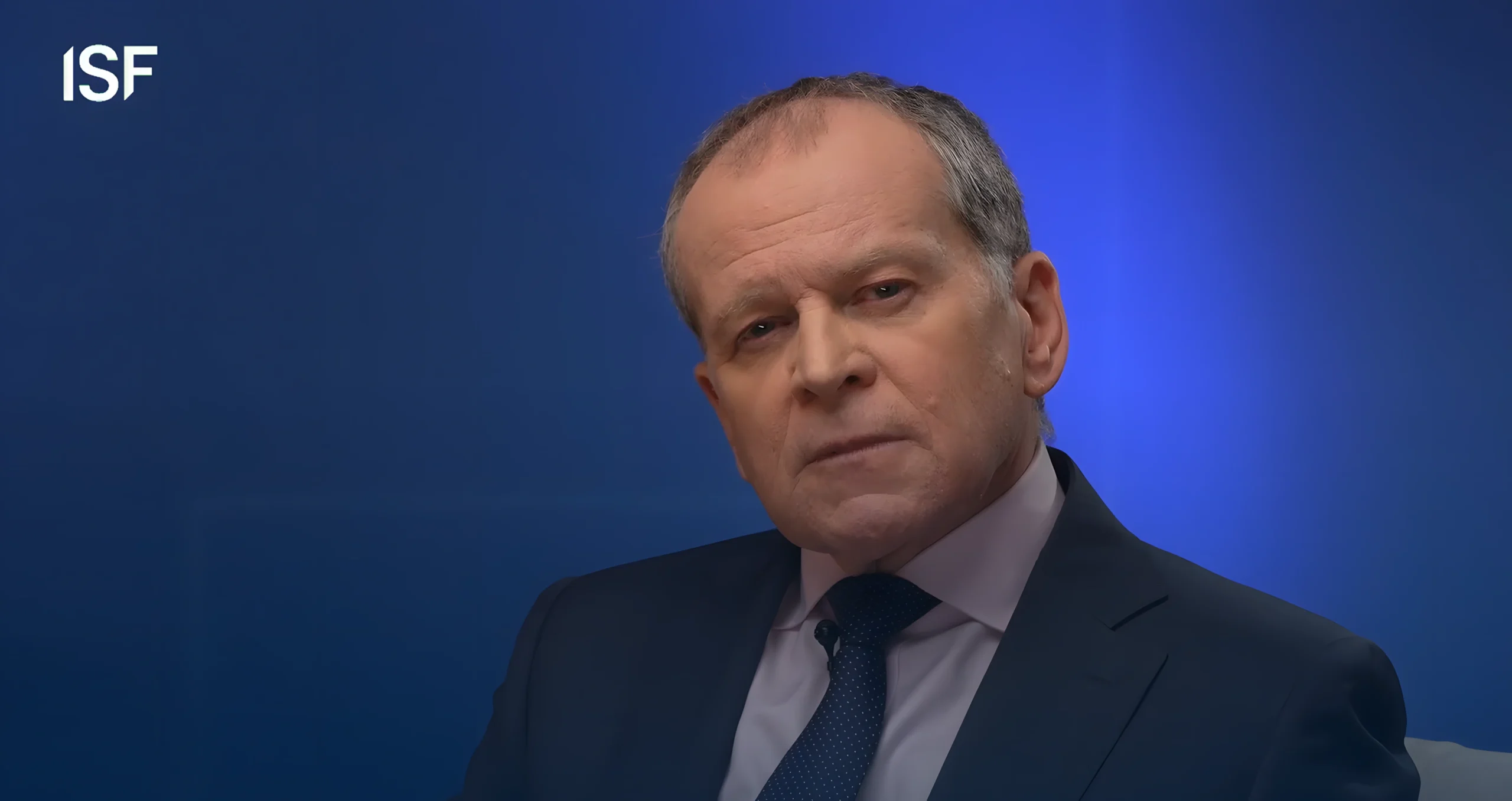

John E. Kaye

- Artificial Intelligence, Home, Technology

A lack of global cooperation could severely hinder the safe and seamless use of AI, making the need for solutions imperative, says tech expert Mark Minevich

In an era where AI is redefining what’s possible, its rapid evolution is not just a technological marvel but a global phenomenon reshaping everything from healthcare to transportation. Yet this relentless progress comes with a condition: the vast potential of AI is intrinsically linked to our ability to foster unprecedented global cooperation in its development, governance, and ethics.

At a time when AI is becoming a cornerstone of societal advancement, we find ourselves at a crossroads. Nations are beginning to recognise AI’s transformative impact, yet competitive tensions and fragmented approaches threaten to splinter the AI landscape. Bridging these divides is a necessity if AI’s capabilities are to be harnessed equitably across the globe. The path to global cooperation is strewn with challenges, but united action on AI is imperative for our collective future.

The need for oversight is outpacing policy

As in the early days of the internet, AI innovation is sprinting ahead of regulatory frameworks, plunging us into a new kind of Wild West. AI systems powerful enough to make life-altering decisions in sectors such as healthcare, criminal justice, and employment are being developed and deployed with minimal oversight. Businesses are leading this drive and focusing chiefly on efficiency and scale, while critical questions of ethics, bias, and transparency remain under-examined. This governance gap leaves society ill-equipped to understand AI’s societal impacts, let alone regulate them.

This disjointed approach to AI regulation is evident on the global stage. The European Union, for instance, is pioneering risk-based AI regulation, including outright prohibitions on practices it deems to pose an unacceptable risk, such as social scoring. This stands in stark contrast to the United States’ preference for industry self-regulation. Such divergent strategies risk fragmenting the AI landscape, affecting not only national policies but also the operations of technology companies worldwide.

The need for coherent governance and policy alignment is urgent. As AI continues to weave itself into the fabric of our daily lives, the current regulatory patchwork fails to provide the comprehensive oversight needed. The task ahead is clear: to close these governance gaps and harmonise norms across national borders.

Rapid advancement vs. lagging global governance

Artificial intelligence is revolutionising sectors from healthcare to transportation at an unprecedented pace, yet its full potential hinges on global cooperation in development, governance, and ethics. As national governments, including those in Europe, grapple with AI’s implications, competitive tensions and disjointed efforts threaten to fragment the AI landscape. Bridging these divides is crucial for equitable AI development worldwide.

Building a unified global AI roadmap

As noted above, AI innovation has outpaced governance frameworks, creating a regulatory Wild West. Corporations are leading AI advancement with scant oversight, while governments, including those of European nations, scramble to regulate. The sharp contrast between the EU’s proposed risk-based AI regulations and the US’s preference for industry self-regulation underlines the need for unified global governance that can effectively address AI’s societal impacts.

The intense technology competition between China and the United States poses a significant challenge to global AI cooperation. Europe’s role in this dynamic is crucial and distinctive: as a potential mediator, it can help build a more balanced global AI ecosystem and avoid the bifurcation of AI technologies along political lines.

To counteract unilateralism and fragmentation in AI, it is essential to foster inclusive global cooperation. Initiatives like the AI for Good Global Summit demonstrate the importance of multi-stakeholder collaboration. Additionally, formulating international AI safety standards and mainstreaming ethics through multilateral accords are imperative. With its strong emphasis on data privacy and human rights, Europe can play a pivotal role in shaping these global standards.

Four focus areas can help catalyse the cooperation that AI so badly needs on issues of ethics, standards, and priorities:

- Facilitating multi-stakeholder collaboration – International organisations have a vital role to play in convening platforms and initiatives where businesses, academic experts, civil society groups, and governments can collaborate on framework agreements. The annual AI for Good Global Summit, convened by the International Telecommunication Union (ITU), a United Nations agency, is one example of bringing diverse interests together to build common ground. More such forums are needed for transparent dialogue that develops shared priorities benefiting all of humanity.

- Formulating international AI safety standards – Global bodies of technical experts from industry and academia should develop mechanisms such as safety certifications, model regulations, and best practices for high-risk AI applications like autonomous vehicles and healthcare systems. Even where adoption is voluntary, such standards can steer industries towards safer norms and prevent the race to the bottom that unilateral approaches invite. The Montreal Protocol’s success in aligning nations to phase out ozone-depleting CFCs shows what coordinated international commitments can achieve.

- Mainstreaming ethics through multilateral accords – Norm-setting bodies and intergovernmental institutions have a key role to play in promoting ethically aligned principles for AI development and deployment. The OECD, through its AI Principles, and UN agencies such as UNESCO, through its Recommendation on the Ethics of Artificial Intelligence, have already set out shared values and guidelines on human rights, bias prevention, transparency, and accountability. More nations must enshrine these principles in national policy and establish oversight mechanisms to uphold them. External audits by civil society can also expose violations and name and shame offenders.

- Inclusive technology transfer and capacity building – Developed nations should assist emerging economies through skills training, educational exchanges, and technology transfer to build their capacity for responsible AI innovation. This would broaden the shared stake in rule-based global cooperation and help prevent a destabilising AI divide between the developed and developing world. It could also offer competitive commercial alternatives to Chinese AI technologies that skirt ethical and human rights considerations.

Moving forward

Realising AI’s vast potential demands a concerted effort from governments, corporations, and civil society to align global standards and priorities. This collaboration must put human rights and ethics ahead of mere efficiency. With its distinctive position and values, Europe can make a significant contribution to steering AI’s global development in a more inclusive and ethical direction.

About the Author

Mark Minevich is the author of the newly released award-winning book, ‘Our Planet Powered by AI’. He is an AI strategist, investor, UN Advisor, and AI advocate.