Spotlight on Europe’s AI regulation

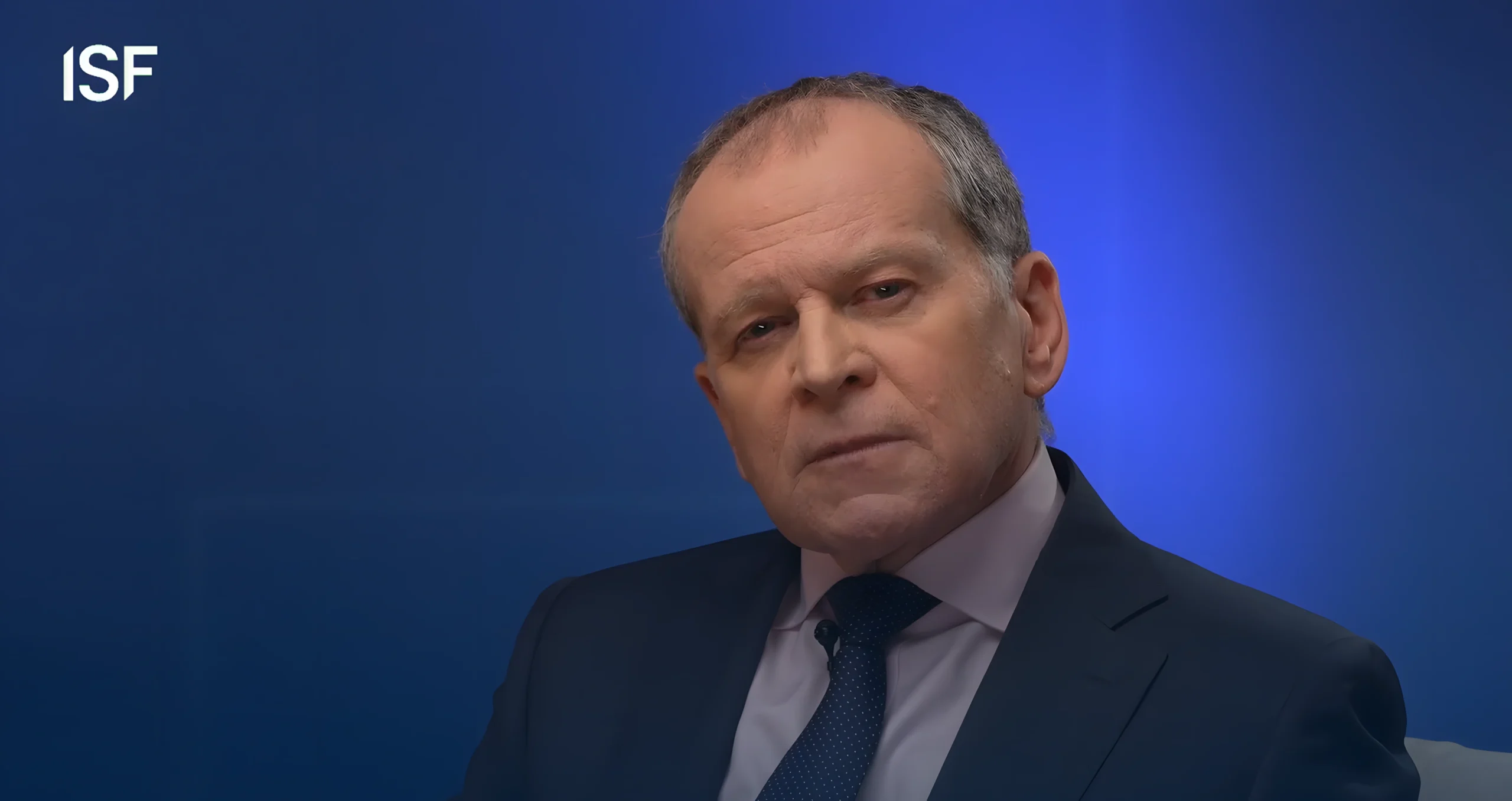

John E. Kaye

Brian Mullins, CEO of Mind Foundry, assesses the EU’s legal framework for AI: where it gets things right, and where there is still work to be done

There are many words that find their way into conversations about regulation: “stifling”, “responsible”, “protective”, and “obfuscate” are just some of them. However, regulation, consumer protection, and innovation shouldn’t be at odds with each other. Regulation of high-impact technologies is inevitable, and desirable, but if regulators don’t fully understand these technologies, companies will continue to innovate around regulation.

Involving a range of stakeholders from the outset, including governments, commercial organisations, academics, and experts, can help address this knowledge gap. I was pleased to see that this was a priority for the European Commission, which consulted more than 1,000 stakeholders to help shape the first-ever legal framework on AI. This cross-sector collaboration certainly illuminated some of the most important issues surrounding AI today. In this article I’ll look first at where the Commission got it right, and then at where there is still work to do.

Turning a corner on transparency, and a focus on skills and learning

It was no surprise to see the regulation focus on transparency, particularly in the context of high-risk applications. AI systems that are used to detect emotions or categorise people based on their characteristics will first have to tell people that they are interacting with AI, and then provide thorough documentation about the use of the system to regulators. The assumption that AI is too complex to understand is dangerous: if we don’t understand these technologies and their potential risks, we leave room to absolve ourselves of responsibility when things go wrong.

The legislation defines AI as software developed using machine-learning approaches, logic- and knowledge-based approaches, or statistical approaches. Anchoring the definition in how systems are built makes it easier for humans to understand how a machine is learning and, most importantly, to challenge, mentor, and intervene as appropriate. Explainability must not stop with the people who create AI; it needs to extend to users so they can make better decisions. For example, if an AI system is screening out candidates without a university qualification, humans can step in to reshape that rule.

Essentially, AI will not be truly explainable unless the individuals whose lives are affected – and who are unlikely to have a technical understanding of AI – are also able to understand how decisions are made.

Linked closely to transparency is the emphasis on skills. Recent data on the AI skills gap found that 93% of organisations in the UK and US consider AI to be a business priority, but more than half (51%) don’t have the right mix of AI talent to bring their strategies to life. Businesses and governments globally are increasing their efforts to upskill the future workforce – having parts of this effort mandated in law will provide the incentive and support to help close the skills gap.

AI is already the norm in many industries, and it has huge potential to develop more complex skills and knowledge about the external world. With potential comes risk, so it’s encouraging to see continuous post-deployment learning by AI systems called out as something requiring tighter regulation. AI companies must identify how their solutions will evolve after deployment and provide guidance to keep them within the law, while still prioritising innovation.

The impact of design

I applaud the call for a set of standard mandatory requirements applying to the design and development of high-risk AI systems before they enter the market. In most cases, AI is not a standalone product but a feature of existing products, and this poses a real risk. If we get caught up in the impact of AI-driven products, some of which are far less harmful than others (compare smart assistants with mass-surveillance technologies), we risk missing the potentially harmful systemic impact of the AI component itself. This could be anything from learned racism to AI’s environmental impact. Whether the issue is bias or climate change, AI must be developed and measured on its system-wide impact to ensure it works for the good of everyone.

Now, let’s have a look at where there is more work for the European Commission to do.

Systemic impact: Who’s responsible?

The focus on design goes some way to addressing the system-wide impact of AI solutions, but we need more clarity on everything from how a decision affects someone’s life to how the data itself is a product of the power structures embedded in society.

AI might play a small part in a largely human or non-AI decision-making system, or it may make the majority of the decisions. The difference between these two poles is vast, and this makes for a slippery slope. The only way AI can be implemented safely is if its impact is measured systemically. In the same way that GDPR was developed to respect the fundamental rights of the individual, any AI regulation must respect the fundamental rights of both individual and population.

Systemic impact should not divert attention away from individual responsibility. The regulation focuses on commercial companies, which could be heavily fined for bad practice, but this leaves open the question of where responsibility lies for others in the ecosystem. Would breaches of the regulation fall at the feet of AI manufacturers or the companies implementing the AI systems? The legislation also lacks individual recourse, relying instead on the data protections in GDPR. This will not protect individuals from irresponsible actions during the model-building or system design process.

A final point of contention is the clause in the EU regulation stating that applications “authorised by law” would be exempt from the transparency rules. This empowers individual states to decide what is classified as lawful, and could easily be abused by corrupt governments. Recent reports of police in nations outside the EU using emotion-detection AI to determine detainees’ innocence or guilt are troubling indicators of the direction AI development could take if left unregulated.

High-quality datasets – do they even exist?

The short answer is no, despite the regulation’s requirement for “high-quality datasets” for high-risk AI systems. There is no widely accepted technical definition of “high-quality”, and without a universally recognised definition the law becomes difficult to interpret and enforce.

Moreover, it is impossible to remove bias from existing datasets: even if gender were removed, for example, it could still be inferred from other attributes such as salary or age. AI is proficient at finding patterns in existing datasets and presenting back truths about the world. We should home in on problematic data and address the issues at their core, rather than bask in the false sense of security that abstract definitions provide.
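
To make the proxy point concrete, here is a minimal Python sketch on invented data. The records, the `salary_band` values and the majority-vote "predictor" are all hypothetical illustrations, not anything defined by the regulation. It deletes the gender column and then checks how often gender can still be guessed from salary band alone.

```python
from collections import Counter, defaultdict

# Hypothetical, invented records of (gender, salary_band). They exist only to
# show the mechanism; they are not drawn from any real dataset.
RECORDS = [
    ("F", "low"), ("F", "low"), ("F", "low"), ("F", "mid"),
    ("M", "mid"), ("M", "mid"), ("M", "high"), ("M", "high"),
]


def remove_gender(records):
    """'De-bias' the data by deleting the gender column, keeping salary_band."""
    return [salary for _gender, salary in records]


def proxy_recovery_rate(records):
    """Estimate how often gender can be recovered from salary_band alone.

    For each salary band, predict the most common gender observed in that
    band (an in-sample, majority-vote lookup table), then count how often
    the prediction matches the true gender.
    """
    counts = defaultdict(Counter)
    for gender, salary in records:
        counts[salary][gender] += 1
    predictor = {band: c.most_common(1)[0][0] for band, c in counts.items()}

    salaries = remove_gender(records)  # what a model would actually "see"
    truths = [gender for gender, _salary in records]
    hits = sum(predictor[s] == g for s, g in zip(salaries, truths))
    return hits / len(records)


def baseline_rate(records):
    """Accuracy of always guessing the single most common gender."""
    counts = Counter(gender for gender, _salary in records)
    return counts.most_common(1)[0][1] / len(records)


if __name__ == "__main__":
    print(f"blind guess:      {baseline_rate(RECORDS):.1%}")        # 50.0%
    print(f"from salary only: {proxy_recovery_rate(RECORDS):.1%}")  # 87.5%
```

On these eight made-up records, salary band alone recovers gender 87.5% of the time, against 50% for a blind guess. Real datasets will give different numbers, but the mechanism is the same: deleting a column does not delete the information it carried.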

The regulation reinforces the importance of “human oversight”, but is this the most valuable role a human can play? Methods of human oversight generally require human intervention at two key points: when the AI system doesn’t know how to complete a task, or when the human deems it appropriate to step in. While logical in theory, humans are imperfect and therefore these interventions are imperfect too. Humans may be acting on their own biases and reinforcing them into the AI systems, or their interventions may be too slow and easily routed around.
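
The two intervention points described above can be sketched as a simple routing rule. This is a hypothetical Python illustration, not a mechanism the regulation prescribes: the `route` function, the `Decision` fields and the 0.8 confidence threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    outcome: str     # what was decided, or "pending_review"
    handled_by: str  # "model" or "human"
    reason: str      # why this route was taken


def route(model_label: str, model_confidence: float,
          human_override: Optional[str] = None,
          threshold: float = 0.8) -> Decision:
    """Hypothetical oversight rule covering the two intervention points:
    1. a human chooses to step in (human_override is set), or
    2. the model is not confident enough to act on its own.
    The 0.8 threshold is an arbitrary illustrative value.
    """
    if human_override is not None:
        # Point 2 in the text: the human deems it appropriate to intervene.
        return Decision(human_override, "human", "human chose to intervene")
    if model_confidence < threshold:
        # Point 1 in the text: the system "doesn't know", so it defers.
        # A real system would enqueue this for review; here it is only flagged.
        return Decision("pending_review", "human", "model confidence below threshold")
    return Decision(model_label, "model", "model confident enough to act")


print(route("shortlist", 0.95).outcome)                          # shortlist
print(route("reject", 0.55).outcome)                             # pending_review
print(route("reject", 0.95, human_override="shortlist").outcome) # shortlist
```

The sketch also shows the weakness described above: whatever the human decides passes through unchecked, and every low-confidence case waits in a queue for a person to act.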

Humans can have a more powerful role through Human/AI collaboration. This involves developing the AI so it learns when it requires human guidance, how to operate without human input, and how to communicate with other agents. This creates a much more intuitive and efficient relationship.

Where do we go from here?

The EU’s AI regulation will have a significant impact not only on the countries it governs, but also on AI creators and users globally. It is commendable that the EU is attempting to set a gold standard for the responsible development of AI, but we must keep one eye firmly on innovation, particularly in the UK.

The UK is the third-most important country in the world for developing AI, after the United States and China. However, that place on the global stage is not guaranteed, and we need to think about how we continue to develop best-in-class technology while deploying responsible regulatory frameworks. Time will tell whether the UK can strike that balance, and whether it can use the EU’s regulations as a springboard for its own governance framework in a way that secures its place as the global centre for responsible, state-of-the-art artificial intelligence.
