AI is powering the most convincing scams you’ve ever seen

John E. Kaye

Phishing attacks are evolving fast, using automation, personalisation and speed to outmanoeuvre traditional defences. Josh Bartolomie, Chief Security Officer and VP of Global Threat Services at Cofense, sets out how cybercriminals are applying AI to sharpen their tactics and what organisations must do to respond

The rapid evolution of artificial intelligence (AI) is transforming industries and reshaping the cybersecurity landscape, especially when it comes to phishing attacks. While AI introduces significant opportunities for innovation and automation, it also presents grave risks. Cybercriminals are increasingly using AI to launch sophisticated phishing campaigns and malware attacks that are more frequent, more varied, and more likely to bypass traditional security measures, creating greater risk for organisations around the globe.

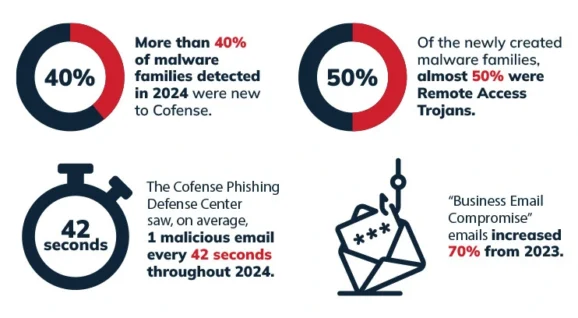

Email continues to be the most exploited channel for cyber threats due to its ubiquity in business communications. In 2024, Cofense identified a malicious email every 42 seconds. The most significant growth in reported malicious emails was in the construction industry (a 1,282% increase), followed by education (341%) and tax-related campaigns (340%). AI’s influence has enabled attackers to craft more convincing, iterative, and frequent attacks, making multi-faceted phishing defence more critical than ever.

Against this backdrop, several clear trends have emerged that show how AI is being used to sharpen existing scams and to create entirely new ways of deceiving and exploiting. These developments point to a step-change in both the scale and complexity of phishing threats.

1. AI Accelerates Malware Development

Generative AI has streamlined the creation of new malware, reducing the expertise required to produce dangerous software. In 2024, nearly half of the new malware families identified were Remote Access Trojans (RATs), which allow attackers to control infected systems remotely. AI also helps attackers automate malware generation and feed the lessons of each iteration back into their models, so that new variants evolve in near real time and continue to bypass email and organisational security tools.

2. Generative AI Enables Precision Targeting

Threat actors use generative AI to create highly personalised and convincing phishing content. By scraping publicly available data, such as job titles and social media posts, attackers craft messages that resonate with specific individuals. The realism of these messages, combined with natural language generation, makes them especially effective at deceiving targets.

This automation has enabled threat actors to scale their campaigns with relatively little effort, leading to a noticeable rise in business email compromise (BEC) schemes, including convincing invoice fraud using spoofed email chains and fake executive approvals.

3. AI Boosts Business Email Compromise (BEC)

BEC attacks surged 70% from 2023 to 2024, partly due to AI’s ability to automate, personalise, and adapt email content dynamically. One prevalent scam that Cofense identified involved fake invoice requests that appeared to have been approved by senior executives, exploiting social trust within organisations. Often, these messages originated from seemingly legitimate domains, tricking employees into taking financially harmful actions.
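One way a sender domain can seem legitimate is by being a near-miss of a trusted one. The sketch below illustrates a simple check for that case using Python's standard `difflib` module. The allow-list `TRUSTED_DOMAINS`, the function name `lookalike_of`, and the 0.85 threshold are all hypothetical choices made for illustration; they are not drawn from Cofense's tooling, and a check like this would only ever be one signal among many.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list of domains the organisation really deals with.
TRUSTED_DOMAINS = {"example.com", "example-supplier.com"}


def lookalike_of(sender_domain, trusted=TRUSTED_DOMAINS, threshold=0.85):
    """Return the trusted domain that sender_domain closely imitates, or None.

    An exact match is treated as genuine. A domain that is very similar to a
    trusted one, but not identical, is treated as a possible impersonation.
    The threshold is an assumed value and would need tuning on real mail.
    """
    domain = sender_domain.lower().strip()
    if domain in trusted:
        return None

    best_match, best_ratio = None, 0.0
    for candidate in sorted(trusted):
        ratio = SequenceMatcher(None, domain, candidate).ratio()
        if ratio > best_ratio:
            best_match, best_ratio = candidate, ratio

    return best_match if best_ratio >= threshold else None


# "examp1e.com" swaps the letter l for the digit 1.
suspicious = lookalike_of("examp1e.com")
genuine = lookalike_of("example.com")
unrelated = lookalike_of("unrelated.org")
```

Here `suspicious` names the trusted domain being imitated, while `genuine` and `unrelated` are both `None`. A check of this kind would not catch a message sent from a domain that really has been compromised, which is one reason human review remains part of the picture.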

4. Polymorphic Phishing Escalates

Polymorphic phishing is on the rise. In this advanced form of attack, cybercriminals continuously change, or ‘morph’, the content, structure, or appearance of their malicious emails to evade detection by security systems. Unlike static phishing methods, these attacks dynamically alter subject lines, sender details, and message formats, which makes them hard to detect using traditional security systems.
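To see why morphing content defeats exact-match detection, consider the minimal sketch below. It compares a known malicious message with a lightly reworded variant in two ways: by cryptographic fingerprint, which changes completely when a single character changes, and by a fuzzy similarity score. The sample messages, the helper names, and the normalisation rules are invented for illustration and do not represent any vendor's detection logic.

```python
import hashlib
import re
from difflib import SequenceMatcher


def fingerprint(text):
    """Exact-match signature: any change to the text changes the hash."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def normalise(text):
    """Lower-case the text, mask runs of digits, and collapse whitespace."""
    text = text.lower()
    text = re.sub(r"\d+", "#", text)
    return re.sub(r"\s+", " ", text).strip()


def similarity(a, b):
    """Similarity between 0.0 and 1.0 after normalisation."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


# Invented examples: the variant changes the invoice number and a few words.
known_bad = "Invoice 48213 is overdue. Please review the attached file today."
variant = "Invoice 90377 is now overdue. Kindly review the attached file today."

exact_match = fingerprint(known_bad) == fingerprint(variant)
score = similarity(known_bad, variant)

print(f"Exact signature match: {exact_match}")
print(f"Fuzzy similarity: {score:.2f}")
```

The signature comparison fails even though the two messages are plainly the same lure, while the similarity score stays high. Fuzzy matching of this kind is easy to evade in turn, for example by rewriting the whole message with a language model, which is why it can only be one layer of a defence.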

While AI can aid in identifying certain types of anomalies in email patterns, human analysts are essential due to the level of diversification and continued advancement of phishing threat content. Their ability to provide contextual understanding complements AI tools, enabling better threat identification and response. As noted by Cofense experts, successful defence against polymorphic threats requires the synergy of machine and human intelligence.

5. Diversified Phishing Tactics

Phishing campaigns are no longer limited to a few recurring themes or targets. Attackers now draw on a wide range of narratives, emotional triggers, and technical disguises to increase their success rates. Examples include fake tax offers, urgent security alerts, disaster-related charity appeals, and counterfeit communications from trusted brands, with Microsoft the most frequently spoofed brand in 2024.

This diversification erodes the effectiveness of pattern-based detection tools and heightens the need for sophisticated, adaptive security measures.

Quantifying the AI-Driven Threat Landscape

Recent data paints a stark picture of just how rapidly the threat landscape is evolving. The figures below, drawn from Cofense’s latest threat intelligence, show the scale and speed at which phishing tactics are advancing — and the growing sophistication of the tools behind them:

- Over 40% of malware families detected in 2024 were new to Cofense.

- 50% of new malware strains were RATs.

- Tax-themed phishing campaigns rose by 340%.

- Use of legitimate files in phishing increased by 575%, showing how attackers blend malicious content with trusted file types to avoid detection.

- Campaigns using steganography (hiding malicious code within images or files) also rose by 37%, showcasing the increasing complexity of attack methods.

Together, these figures reflect a broader shift in both the scale and complexity of phishing attacks. They also hint at what’s to come, as cybercriminals continue to refine their methods and exploit the growing capabilities of AI. As the technology advances, the cost and complexity of launching high-quality phishing attacks are falling — making it easier for threat actors to produce convincing scams at scale.

Deepfakes and realistic impersonations, whether via video, audio, or written content, are likely to rise, increasing the potential for data breaches and financial fraud. Deloitte forecasts a 32% increase in losses from AI-enhanced frauds, totalling over $40 billion annually by 2027.

The Proactive Defence Strategy

In a landscape where AI makes threats ever more sophisticated, organisations need to adopt a proactive, layered security model. It should merge advanced AI threat detection with human oversight to deliver both speed and accuracy. By leveraging real-time, actionable phishing threat intelligence, organisations can block or swiftly identify many AI-enhanced attacks, including those that slip past conventional security systems.

Additionally, employee training is a crucial defence layer to mitigate the risk and impact of phishing attacks. Educated employees serve as the first and last line of defence, capable of spotting and reporting suspicious emails before damage is done. This approach creates a security-aware culture that enhances organisational resilience against evolving threats.

The rise of AI in cybersecurity presents a double-edged sword. While AI enhances defence tools and processes, it equally empowers cybercriminals with unprecedented scale and capabilities. Phishing attacks are becoming more dynamic, personalised, and difficult to detect, particularly with polymorphic and generative AI-driven methods. Organisations must adapt by integrating AI with human intelligence, expanding threat visibility, and training staff to recognise and report malicious activity. With a forward-looking approach, companies can stay ahead of adversaries in this ever-changing digital battlefield.

Further information

Produced with support from Cofense. For further information, visit www.cofense.com

Main image: Shutterstock
