What to consider before going all in on AI-driven email security

Cofense

- Published

- Cybersecurity, Technology

The promise of fully automated, AI-driven email defence is seductive: faster detection, less reliance on resources, and fewer manual tasks. But the promise often obscures a hard truth. Automation alone cannot solve the email threats of today.

AI has raised expectations in email security, particularly among organisations facing skill shortages and exploding threat volumes. These next-generation tools promise instant threat removal, using models to infer what ‘looks’ malicious and removing it in real time. Yet vendors’ claims of “catching everything” should raise eyebrows. By definition, no perimeter control can detect the threats it misses; if it could, it would have blocked them. This false-negative blind spot makes it easy for teams to overestimate coverage. These tools are only as good as the data they learn from, and with threats constantly evolving, access to live threat intelligence determines whether they succeed or fail.

Josh Bartolomie, VP of Global Threat Services at Cofense, said: “Despite even mature security programmes using a combination of traditional security controls with modern AI models, we’re still seeing threats reach inboxes. In one instance we saw hundreds of malicious messages in a single month that had to be quarantined post-delivery from a client’s network after they had bypassed leading tools.”

Such examples underscore a key point: AI improves filtering but does not close the gap.

Limited accuracy in identifying emerging threats is only one aspect. The more consequential challenges are transparency and data control. Many AI phishing defences operate as opaque systems whose decision-making is difficult to explain, audit, or contest. For regulated industries – financial services, healthcare, critical infrastructure – that lack of visibility is more than a technical nuisance; it is a governance and compliance risk. When a regulator, auditor, or customer asks, “Why was this message allowed or removed?”, the response “Because the model said so” is not an acceptable answer.

This opacity is tied to how many AI systems function. Techniques such as social graph analysis infer normal communication patterns across users and groups, then flag deviations as suspicious. While effective in some contexts, this approach requires large-scale collection and processing of user data: who communicates with whom, how often, at what times, on which topics. This is sensitive data that potentially implicates privacy, user consent, data sovereignty, and cross-border policies.
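To make the data dependency concrete, here is a deliberately minimal sketch of the idea behind communication-pattern baselining. It is an illustration under stated assumptions, not how any particular product works: the function names, the `min_seen` threshold, and the example addresses are all hypothetical.

```python
from collections import Counter

def build_baseline(history):
    """Count how often each (sender, recipient) pair appears in past mail."""
    return Counter((sender, recipient) for sender, recipient in history)

def is_unusual(baseline, sender, recipient, min_seen=2):
    """Flag a message whose sender has rarely or never written to this recipient."""
    # min_seen is an arbitrary illustrative threshold, not a recommended value.
    return baseline[(sender, recipient)] < min_seen

# Hypothetical mail history: (sender, recipient) pairs.
history = [
    ("alice@example.com", "bob@example.com"),
    ("alice@example.com", "bob@example.com"),
    ("carol@example.com", "bob@example.com"),
]
baseline = build_baseline(history)

print(is_unusual(baseline, "alice@example.com", "bob@example.com"))    # False
print(is_unusual(baseline, "mallory@example.net", "bob@example.com"))  # True
```

Even this toy version cannot run without a record of who wrote to whom, which is exactly the kind of data that raises the privacy and sovereignty questions described above.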

Organisations should scrutinise AI tooling through a data protection lens: What user data does the system ingest? Is it anonymised or aggregated? Where is it stored and processed? Who can access it? How long is it retained? Each answer can expose governance gaps under regimes ranging from GDPR to sector-specific mandates such as HIPAA, GLBA, or SOX. Organisations can lose visibility into how that data is handled, and poorly managed AI can inadvertently leak sensitive metadata, create profiling risks, and undermine user trust. Closing those gaps means keeping audit trails, having clear data-processing agreements, defining whether each party is a controller or processor, and showing that personal data is used lawfully, kept to a minimum, and used only for specific purposes.

So how can organisations harness AI’s speed without absorbing unacceptable risk? The answer lies in controlled automation, which blends machine efficiency with human-vetted intelligence and enforceable transparency:

1. Augment, don’t replace.

Use AI to accelerate triage, enrichment, and workflow, drawing on its pattern matching and ability to scale. Humans then add verification, bringing context, judgement of intent, and handling of exceptions to reduce false negatives. Internal users are often the first to see phish that evade perimeter defences, so training them to recognise threats and giving them a simple way to report is vital to feeding live threat intelligence.

2. Post-delivery visibility is non-negotiable.

Assume that some threats will get through. Build for rapid detection and removal by tapping into live threat intelligence from outside networks and trained reporters. This helps to identify threats as they evolve. Utilise AI to cluster similar threats and prioritise based on risk, whilst analysts validate, highlight new indicators for detection, and kick off targeted auto-quarantine.

3. Transparency by design.

Ensure system-driven actions are explainable with audit logs and evidence, especially in regulated environments. Security operations should be able to answer the following questions: What factor triggered the decision? What evidence supported it? Who approved the action? When and how was it remediated? Measuring what gets through (false negatives), time to detection and quarantine, and reinfection rates will also show where to improve.

4. Data control with choice.

Aligning with legal teams and deploying tools that fit an organisation’s risk posture is vital – whether that means on-premises or customer-hosted for maximum control, or a managed service with full transparency when efficiency is needed. In all cases, organisations should clearly define what data is shared and how it is processed, and set out regular audits and transparent retention periods.

This and external threat intelligence should feed all layers of email security continuously, both to identify and mitigate live threats before they hit a network and to trigger fast remediation if they slip through. Critics may argue that adding humans back into the loop slows down defence. In practice, controlled automation just regulates the speed of everything to optimise accuracy at scale. Automation accelerates repeatable tasks, while humans validate where errors are costly. The result is faster response times, fewer critical oversights, and a clearer audit trail.
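As a rough illustration of how the numbered points above fit together, the sketch below clusters user-reported messages, ranks the clusters, and records an analyst-approved quarantine in a form that answers the four audit questions (trigger, evidence, approver, time and method of remediation). Everything here is an assumption made for illustration: the `Report` and `AuditRecord` fields, the clustering key, the use of cluster size as a stand-in for risk, and the example data are hypothetical rather than taken from any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Report:
    message_id: str
    sender_domain: str
    subject: str
    delivered_at: datetime

@dataclass
class AuditRecord:
    trigger: str             # what factor triggered the decision
    evidence: list           # message IDs that supported it
    approved_by: str         # who approved the action
    remediated_at: datetime  # when it was remediated
    action: str              # how it was remediated

def cluster_reports(reports):
    """Group reports that share a sender domain and a normalised subject line."""
    clusters = defaultdict(list)
    for r in reports:
        clusters[(r.sender_domain, r.subject.strip().lower())].append(r)
    return clusters

def prioritise(clusters):
    """Largest clusters first: a crude stand-in for a real risk score."""
    return sorted(clusters.items(), key=lambda kv: len(kv[1]), reverse=True)

def quarantine(key, members, analyst, now):
    """Record an analyst-approved quarantine and the slowest time-to-quarantine."""
    record = AuditRecord(
        trigger=f"cluster {key}",
        evidence=[m.message_id for m in members],
        approved_by=analyst,
        remediated_at=now,
        action="quarantine",
    )
    worst_delay = max(now - m.delivered_at for m in members)
    return record, worst_delay

# Hypothetical reports from trained users.
t0 = datetime(2025, 1, 6, 9, 0)
reports = [
    Report("m1", "pay-roll.example", "Updated payslip", t0),
    Report("m2", "pay-roll.example", "updated payslip ", t0 + timedelta(minutes=5)),
    Report("m3", "news.example", "Weekly digest", t0),
]

ranked = prioritise(cluster_reports(reports))
top_key, top_members = ranked[0]
# The analyst's approval is the human step; automation only proposes the cluster.
record, delay = quarantine(top_key, top_members, "analyst.jane", t0 + timedelta(minutes=30))
print(record.evidence, delay)
```

The design choice worth noting is that the machine proposes and the human approves: the quarantine only happens with a named approver, and the resulting record doubles as the evidence an auditor would ask for.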

In summary, fully automated AI phishing defences offer speed but introduce accuracy gaps, opaque decisions, and data risks that regulated enterprises cannot ignore. A resilient strategy does not abandon AI in email security but balances it, pairing automation with human oversight to achieve controlled automation.

The call to action is clear: audit current email defences for post-delivery threat visibility and data governance; create transparent reporting that drives continuous improvement; strengthen post-delivery detection and remediation; and pair machine learning efficiency with human-vetted intelligence for precision. Organisations that take this balanced approach will not only catch more phish but also earn the trust of regulators, customers, and their own users.

Further information

Produced with support from Cofense. For further information about email security solutions and phishing defence services, visit www.Cofense.com

Read More: ‘Speed-driven email security: effective tactics for phishing mitigation’. As phishing attacks grow faster and more sophisticated, security teams must respond with equal speed and precision. This article explores six practical strategies, from clustering emails and automating playbooks to balancing human oversight with AI, to help organisations detect, contain, and neutralise threats efficiently, maximising protection without overburdening staff.

Do you have news to share or expertise to contribute? The European welcomes insights from business leaders and sector specialists. Get in touch with our editorial team to find out more.

RECENT ARTICLES

-

Europe opens NanoIC pilot line to design the computer chips of the 2030s

Europe opens NanoIC pilot line to design the computer chips of the 2030s -

Building the materials of tomorrow one atom at a time: fiction or reality?

Building the materials of tomorrow one atom at a time: fiction or reality? -

Universe ‘should be thicker than this’, say scientists after biggest sky survey ever

Universe ‘should be thicker than this’, say scientists after biggest sky survey ever -

Lasers finally unlock mystery of Charles Darwin’s specimen jars

Lasers finally unlock mystery of Charles Darwin’s specimen jars -

Women, science and the price of integrity

Women, science and the price of integrity -

Meet the AI-powered robot that can sort, load and run your laundry on its own

Meet the AI-powered robot that can sort, load and run your laundry on its own -

UK organisations still falling short on GDPR compliance, benchmark report finds

UK organisations still falling short on GDPR compliance, benchmark report finds -

A practical playbook for securing mission-critical information

A practical playbook for securing mission-critical information -

Cracking open the black box: why AI-powered cybersecurity still needs human eyes

Cracking open the black box: why AI-powered cybersecurity still needs human eyes -

Tech addiction: the hidden cybersecurity threat

Tech addiction: the hidden cybersecurity threat -

Parliament invites cyber experts to give evidence on new UK cyber security bill

Parliament invites cyber experts to give evidence on new UK cyber security bill -

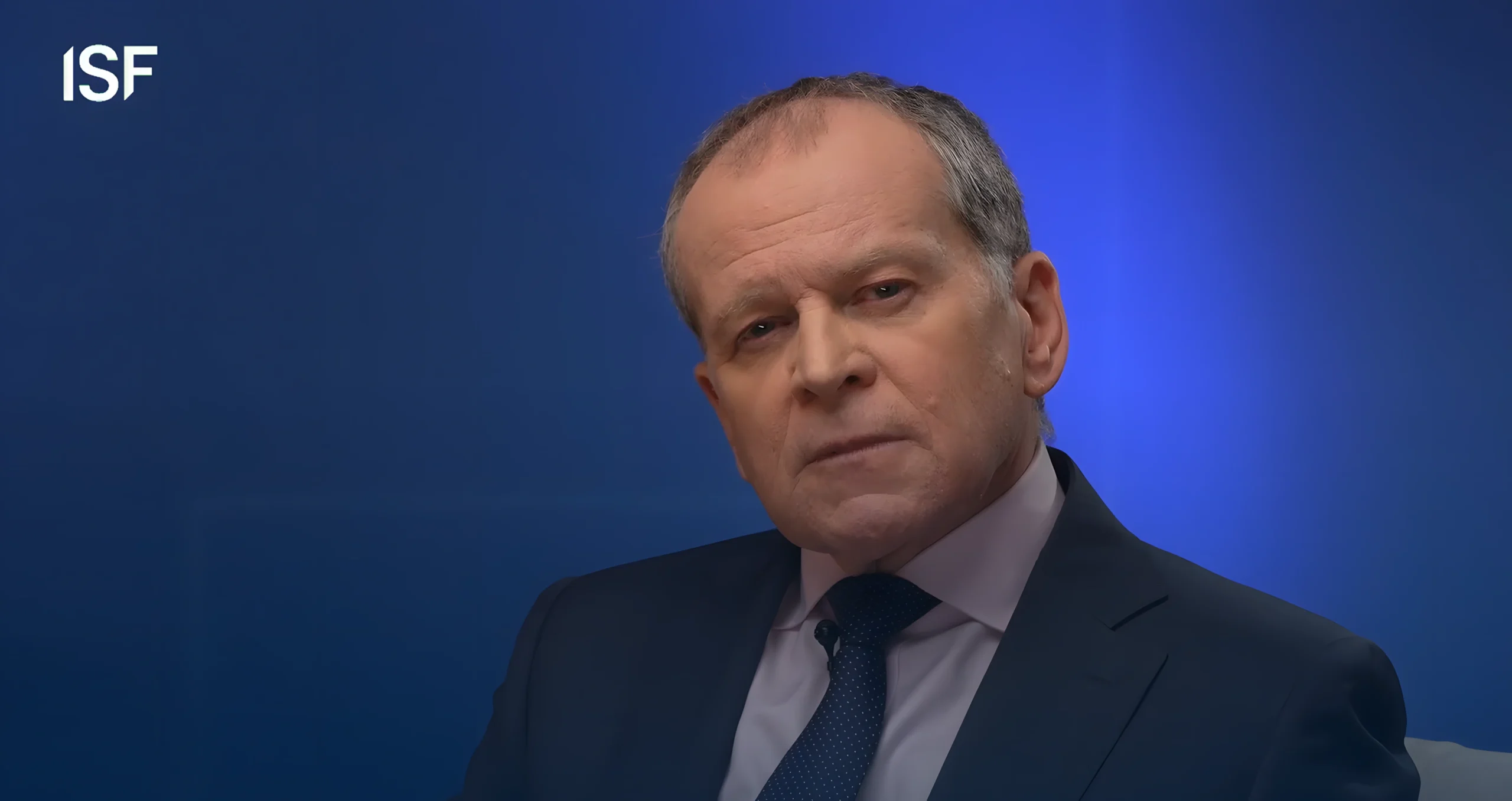

ISF warns geopolitics will be the defining cybersecurity risk of 2026

ISF warns geopolitics will be the defining cybersecurity risk of 2026 -

AI boom triggers new wave of data-centre investment across Europe

AI boom triggers new wave of data-centre investment across Europe -

Make boards legally liable for cyber attacks, security chief warns

Make boards legally liable for cyber attacks, security chief warns -

AI innovation linked to a shrinking share of income for European workers

AI innovation linked to a shrinking share of income for European workers -

Europe emphasises AI governance as North America moves faster towards autonomy, Digitate research shows

Europe emphasises AI governance as North America moves faster towards autonomy, Digitate research shows -

Surgeons just changed medicine forever using hotel internet connection

Surgeons just changed medicine forever using hotel internet connection -

Curium’s expansion into transformative therapy offers fresh hope against cancer

Curium’s expansion into transformative therapy offers fresh hope against cancer -

What to consider before going all in on AI-driven email security

What to consider before going all in on AI-driven email security -

GrayMatter Robotics opens 100,000-sq-ft AI robotics innovation centre in California

GrayMatter Robotics opens 100,000-sq-ft AI robotics innovation centre in California -

The silent deal-killer: why cyber due diligence is non-negotiable in M&As

The silent deal-killer: why cyber due diligence is non-negotiable in M&As -

South African students develop tech concept to tackle hunger using AI and blockchain

South African students develop tech concept to tackle hunger using AI and blockchain -

Automation breakthrough reduces ambulance delays and saves NHS £800,000 a year

Automation breakthrough reduces ambulance delays and saves NHS £800,000 a year -

ISF warns of a ‘corporate model’ of cybercrime as criminals outpace business defences

ISF warns of a ‘corporate model’ of cybercrime as criminals outpace business defences -

New AI breakthrough promises to end ‘drift’ that costs the world trillions

New AI breakthrough promises to end ‘drift’ that costs the world trillions