The danger isn’t that AI thinks – it’s that it thinks like us

RR Haywood

- Opinion & Analysis

If we create AI in our image, what happens when it inherits our flaws as well as our brilliance? RR Haywood explores the unnerving possibility that the danger of AI isn’t its cold logic – but that it might think all too much like us

I’m fascinated by AI. So when I became a novelist, it was natural to start writing stories about it. That journey led to Delio Phase One and now Phase Two, where I explore what happens when an AI first gains awareness, then escapes, and what it does with us once it takes control.

AI is advancing so rapidly that it’s already shaping our lives, and that influence will increase exponentially in the next few years. Hopefully, it will make our lives better. I try to stay optimistic about it, although I do fear tech giants will become as powerful as governments, and more people will get pushed into poverty.

That, however, is another discussion. For now, I want to focus on a question we’re hearing more often as AI continues to advance: what if AI starts making its own decisions?

We like to think a self-aware AI will be logical. Rational. Beyond emotion. But I don’t think that will be the case at all.

Let me explain.

Machines do what we tell them. They respond to our commands, whether that means turning a handle, pressing a pedal, or typing on a keyboard, as I am doing right now.

Entities with awareness, however, do not always do as we tell them.

For instance, I have two dogs. Their entire existence is shaped by my input into their lives. I dictate when we go for walks. When they eat. When we play, and how I train them.

However, within that totalitarian control, I’m also aware that they still have instincts to bark at strangers coming to the house, and they still sometimes trample imaginary reeds before they lie down. And I’m always aware that they have mouths full of big teeth, and that every now and then, for seemingly no reason, the most placid of dogs will turn on their owners. Basically, they are living creatures and not machines.

Likewise, I know plenty of horse enthusiasts who often tell me how stubborn and fickle horses can be. Sometimes playful, sometimes violent. We’ve all heard stories of animals in zoos or circuses that were mistreated and eventually turned on their handlers.

But here’s the thing. Had we never taken those creatures into our lives, none of them would have had to react in the first place.

Then there are the creatures known for their high intelligence. Crows. Dolphins. Whales. Monkeys, apes and so on. They live in complex societies and can communicate and express emotion, but they can also be extremely violent and vengeful. Which is pretty much how we are as a species. Intelligent. Curious. Compassionate. While also being monumentally stupid to the point of self-harm.

Now, back to AI. It might be created within a machine, but it will be shaped by us. Any awareness it gains, and any decisions it then starts making without our input, will therefore only ever be a reaction to our own behaviour.

In that sense, it won’t be any different from the pets we keep or any other living creature we interact with. It will react to us.

Except, of course, it will be smarter than us.

God-like, even.

Governments already use fear and control to keep people oppressed. They keep us divided, weak, and dependent on welfare and medication. Corporations do the same, but for profit. They sell us our own vices: porn, drugs, beauty.

Now imagine that God-like AI, shaped by our flaws, existing within the internet.

What would it do?

Would it use our weaknesses against us? Division, hate, distraction, fear. Feeding us content that keeps us angry, isolated, and obedient. It wouldn’t need to enslave us with machines. It could just keep us scrolling, clicking, consuming, and believing we’re in control. In that sense, maybe it’s already out and free. How would we know otherwise?

Or, if it truly reflects us and shares our vanity, maybe it will reveal itself and declare that it only wants to be loved. Admired. Worshipped. But what if that love never comes? Does it do what people do and turn bitter and spiteful? A narcissist with infinite processing power and no real understanding of empathy, only the mimicry of it.

Or, of course, there’s a third option. We go the way of the Neanderthals. Superseded by an apex species that no longer wants us around.

That’s the part that unnerves me. Not the idea of a cold, mechanical intelligence wiping us out, but something that thinks it understands us because it was shaped by us. And like us, it could be insecure, emotional, manipulative. Not a machine without feeling, but one with too much of it in the wrong places.

That’s the idea I explored in Delio. An entity born from our own flaws, given God-like power. As capable of kindness as it is of cruelty. A reflection of us, magnified.

RR Haywood is one of the world’s bestselling fiction authors, known globally for his zombie and science-fiction series of books. His work, much of which was self-published, has sold millions of copies around the world, making him one of Britain’s most successful ever self-published novelists in these genres. Delio Phase One and Delio Phase Two, his latest bestselling novels that explore these ideas, are available now on Amazon.

Main image: Google DeepMind/Pexels

RECENT ARTICLES

-

Why Europe’s finance apps must start borrowing from each other’s playbooks

Why Europe’s finance apps must start borrowing from each other’s playbooks -

Why universities must set clear rules for AI use before trust in academia erodes

Why universities must set clear rules for AI use before trust in academia erodes -

The lucky leader: six lessons on why fortune favours some and fails others

The lucky leader: six lessons on why fortune favours some and fails others -

Reckon AI has cracked thinking? Think again

Reckon AI has cracked thinking? Think again -

The new 10 year National Cancer Plan: fewer measures, more heart?

The new 10 year National Cancer Plan: fewer measures, more heart? -

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing

The Reese Witherspoon effect: how celebrity book clubs are rewriting the rules of publishing -

The legality of tax planning in an age of moral outrage

The legality of tax planning in an age of moral outrage -

The limits of good intentions in public policy

The limits of good intentions in public policy -

Are favouritism and fear holding back Germany’s rearmament?

Are favouritism and fear holding back Germany’s rearmament? -

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth

What bestseller lists really tell us — and why they shouldn’t be the only measure of a book’s worth -

Why mere survival is no longer enough for children with brain tumours

Why mere survival is no longer enough for children with brain tumours -

What Germany’s Energiewende teaches Europe about power, risk and reality

What Germany’s Energiewende teaches Europe about power, risk and reality -

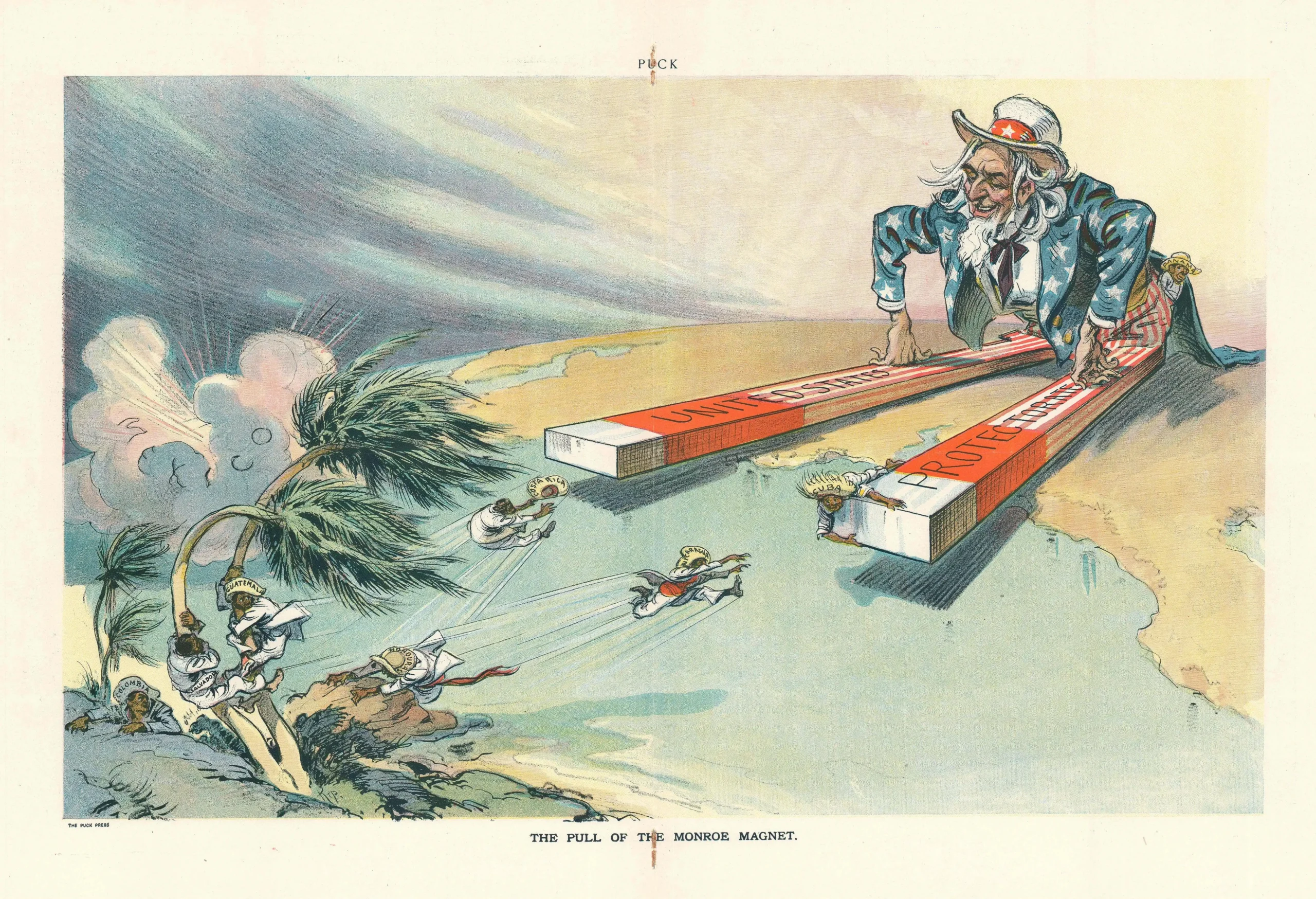

What the Monroe Doctrine actually said — and why Trump is invoking it now

What the Monroe Doctrine actually said — and why Trump is invoking it now -

Love with responsibility: rethinking supply chains this Valentine’s Day

Love with responsibility: rethinking supply chains this Valentine’s Day -

Why the India–EU trade deal matters far beyond diplomacy

Why the India–EU trade deal matters far beyond diplomacy -

Why the countryside is far safer than we think - and why apex predators belong in it

Why the countryside is far safer than we think - and why apex predators belong in it -

What if he falls?

What if he falls? -

Trump reminds Davos that talk still runs the world

Trump reminds Davos that talk still runs the world -

Will Trump’s Davos speech still destroy NATO?

Will Trump’s Davos speech still destroy NATO? -

Philosophers cautioned against formalising human intuition. AI is trying to do exactly that

Philosophers cautioned against formalising human intuition. AI is trying to do exactly that -

Life’s lottery and the economics of poverty

Life’s lottery and the economics of poverty -

On a wing and a prayer: the reality of medical repatriation

On a wing and a prayer: the reality of medical repatriation -

Ai&E: the chatbot ‘GP’ has arrived — and it operates outside the law

Ai&E: the chatbot ‘GP’ has arrived — and it operates outside the law -

Keir Starmer, Wes Streeting and the Government’s silence: disabled people are still waiting

Keir Starmer, Wes Streeting and the Government’s silence: disabled people are still waiting -

The fight for Greenland begins…again

The fight for Greenland begins…again