Complex questions still need people, not machines, researchers find

John E. Kaye

A study of StackExchange data finds that casual users are turning to ChatGPT for simple questions — but still rely on humans for complex ones, suggesting AI is reshaping rather than replacing online knowledge communities

AI chatbots such as ChatGPT are changing how people use online Q&A platforms, but they are not replacing them, according to new research from Rotterdam School of Management, Erasmus University (RSM).

Dr Dominik Gutt and Dr Martin Quinn examined how the launch of ChatGPT affected activity on StackExchange, one of the world’s largest online question-and-answer platforms. Analysing data on the number, complexity and novelty of posts, they found that the extent to which users shift towards AI depends on how complex their questions are.

The study grouped users into three categories — casual, intensive and top contributors — and tracked how their behaviour changed after ChatGPT’s release. Questions from casual users fell by 18 per cent, while activity among intensive and top users declined less sharply.

Casual users were also found to be asking more complex questions, whereas the complexity and originality of posts from committed users remained unchanged.

“This suggests that casual users might just delegate easier questions to ChatGPT but ask more complex questions on the forum to be answered by humans. However, intensive and top users do not show this behavioural pattern. One reason could be that casual users are mainly looking for answers as such, while committed users value the community experience,” Dr Gutt said.

The researchers argue that AI tools are reshaping — rather than replacing — human knowledge-sharing communities.

According to the researchers, the findings offer an important insight for those who rely on these Q&A platforms: AI chatbots aren’t necessarily killing these communities.

“But on the flip side, if the number of questions posted on public platforms decreases and the number on proprietary platforms like ChatGPT increases, then we lose publicly available knowledge,” Dr Quinn added.

The researchers say the trend could have implications for the data used to train AI systems, many of which rely on information from public forums such as StackExchange. If the questions that remain online become more complex, the overall quality of training data may improve, benefiting both AI models and society.

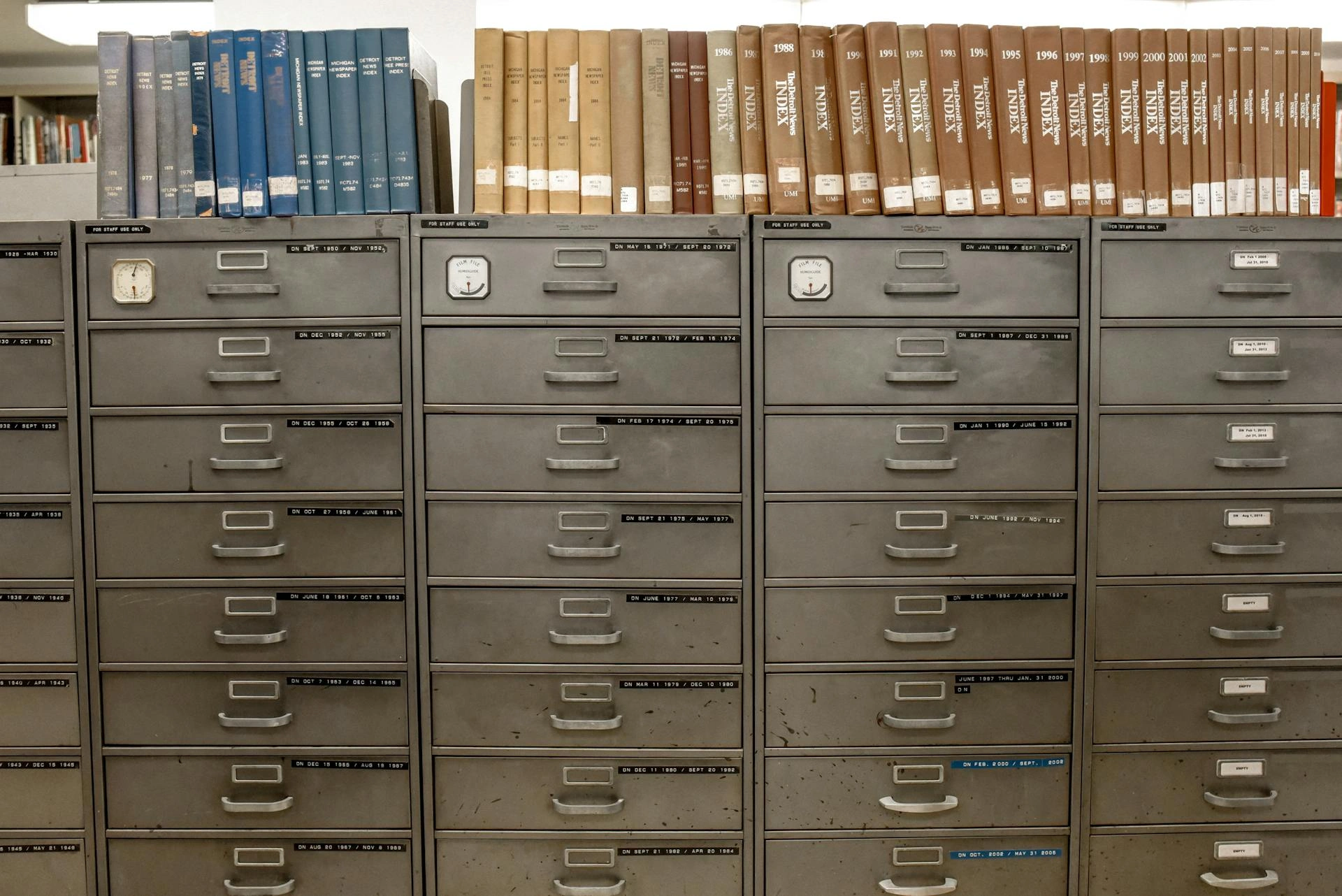

Main image: Pixabay